Configuring Streaming Reading of Spark Driver Execution Results

Scenarios

When a query is executed in Spark, the result set may be large (potentially exceeding 100,000 records). In such cases, the JDBCServer may encounter an out-of-memory (OOM) error due to the volume of data being returned. To address this issue, we offer a data aggregation feature that minimizes the risk of OOM errors while maintaining overall system performance.
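
The idea behind aggregating results to disk rather than to memory can be illustrated with a small, self-contained sketch. This is not how JDBCServer implements the feature; the function names `spill_rows_to_file` and `stream_rows_from_file`, the JSON-lines file format, and the sample rows are all assumptions made for illustration. It only shows why writing a large result set to a local file and reading it back row by row keeps memory use flat.

```python
import json
import os
import tempfile
from typing import Iterable, Iterator


def spill_rows_to_file(rows: Iterable[dict]) -> str:
    """Write each row to a local temp file as one JSON line and return the path."""
    with tempfile.NamedTemporaryFile("w", suffix=".jsonl", delete=False) as spill:
        for row in rows:
            spill.write(json.dumps(row) + "\n")
        return spill.name


def stream_rows_from_file(path: str) -> Iterator[dict]:
    """Yield rows back one at a time instead of loading the whole file."""
    with open(path) as spill:
        for line in spill:
            yield json.loads(line)


# Hypothetical result set, produced lazily like a large query result.
result = ({"id": i, "value": i * i} for i in range(100_000))

path = spill_rows_to_file(result)
total = sum(row["value"] for row in stream_rows_from_file(path))
os.remove(path)  # clean up the spill file once the rows are consumed
```

At no point does the sketch hold all 100,000 rows in a list: the generator feeds the file writer, and the reader yields one row at a time.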

Configuration

Two parameters enable data aggregation: one saves result data to HDFS and the other saves it to the local disk. Two further parameters tune the local-disk mode. Set these parameters in the tuning options of the Spark JDBCServer, then restart the JDBCServer for the settings to take effect.

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the following parameters, and adjust their values.

The parameters spark.sql.bigdata.thriftServer.useHdfsCollect and spark.sql.uselocalFileCollect cannot both be set to true simultaneously.

Table 1 Parameter description

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.sql.bigdata.thriftServer.useHdfsCollect | Indicates whether to save result data to HDFS instead of memory. true: The result data is saved to HDFS. However, the job description on the native JobHistory page cannot be associated with the corresponding SQL statement, and the execution ID in the spark-beeline command output is null. To solve the JDBCServer OOM error and still display this information correctly, you are advised to set spark.sql.uselocalFileCollect instead. Advantages: The query result is stored on HDFS, so JDBCServer OOM errors do not occur. Disadvantages: The query is slow. false: This function is disabled. | false |
| spark.sql.uselocalFileCollect | Indicates whether to save result data to the local disk instead of memory. true: The query result of JDBCServer is aggregated to a local file. Advantages: For small data volumes, the performance overhead is negligible compared to using native memory for data storage. For large data volumes (hundreds of millions of records), this approach significantly outperforms both HDFS-based storage and native memory. Disadvantages: Tuning is required. For large data volumes, it is recommended that the JDBCServer driver memory be 10 GB and that each executor core be allocated 3 GB of memory. false: This function is disabled. | false |
| spark.sql.collect.Hive | Valid only when spark.sql.uselocalFileCollect is set to true. Indicates whether to save the result data to disk in direct serialization mode or in indirect serialization mode. true: The query result of JDBCServer is aggregated to a local file and stored in Hive data format. Advantages: For queries on tables with a large number of partitions, aggregation performance is better than when query results are stored directly on disk. Disadvantages: The same as those of enabling spark.sql.uselocalFileCollect. false: This function is disabled. | false |
| spark.sql.collect.serialize | Takes effect only when both spark.sql.uselocalFileCollect and spark.sql.collect.Hive are set to true. It further improves performance. java: Data is collected in Java serialization mode. kryo: Data is collected in Kryo serialization mode, which performs better than Java serialization mode. | java |
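
The constraints stated in Table 1 and in the note above it can be checked before the values are entered in FusionInsight Manager. The following is a minimal sketch, not part of the product: the helper names `is_true` and `check_collect_settings` are hypothetical, the settings are assumed to be available as a plain dictionary of strings, and a missing key is assumed to carry the example value from the table.

```python
from typing import Dict, List

HDFS_COLLECT = "spark.sql.bigdata.thriftServer.useHdfsCollect"
LOCAL_COLLECT = "spark.sql.uselocalFileCollect"
HIVE_FORMAT = "spark.sql.collect.Hive"
SERIALIZER = "spark.sql.collect.serialize"


def is_true(conf: Dict[str, str], key: str) -> bool:
    """Treat a missing key as "false", the example value in Table 1 (assumption)."""
    return conf.get(key, "false").strip().lower() == "true"


def check_collect_settings(conf: Dict[str, str]) -> List[str]:
    """Return human-readable problems; an empty list means the settings are consistent."""
    problems: List[str] = []
    local = is_true(conf, LOCAL_COLLECT)
    hive = is_true(conf, HIVE_FORMAT)

    # The two aggregation switches are mutually exclusive.
    if is_true(conf, HDFS_COLLECT) and local:
        problems.append(f"{HDFS_COLLECT} and {LOCAL_COLLECT} cannot both be true")

    # The Hive-format option is valid only with local file aggregation.
    if hive and not local:
        problems.append(f"{HIVE_FORMAT} is valid only when {LOCAL_COLLECT} is true")

    # The serializer accepts java or kryo, and kryo needs both switches above.
    serializer = conf.get(SERIALIZER, "java")
    if serializer not in ("java", "kryo"):
        problems.append(f"{SERIALIZER} must be java or kryo, got {serializer!r}")
    elif serializer == "kryo" and not (local and hive):
        problems.append(
            f"{SERIALIZER} takes effect only when {LOCAL_COLLECT} "
            f"and {HIVE_FORMAT} are both true"
        )
    return problems


# Example: a local-file setup with Hive format and Kryo serialization.
candidate = {LOCAL_COLLECT: "true", HIVE_FORMAT: "true", SERIALIZER: "kryo"}
for problem in check_collect_settings(candidate):
    print(problem)
```

The check is deliberately strict about kryo only, because setting the serializer to java without the other two switches has no effect either way.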


- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

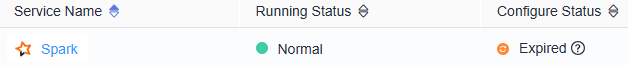

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that it has no adverse impact.
