Evaluating Datasets

Introduction to Data Evaluation

Data evaluation assesses the quality and representativeness of a dataset across multiple dimensions, and detects and resolves potential problems. Data evaluation generally involves the following methods:

Quality evaluation:

- Dataset quality evaluation: Randomly select samples from the dataset and score their quality manually or automatically to estimate the quality of the whole dataset.

- Sample quality evaluation: Evaluate the integrity, accuracy, and consistency of data samples to ensure that the data is not damaged, ambiguous, or contradictory.
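The sampling-and-scoring approach above can be sketched in Python. This is a minimal illustration under stated assumptions, not how ModelArts Studio scores datasets: it assumes each record is a dict with hypothetical `question` and `answer` fields, treats an empty field or a Unicode replacement character (U+FFFD) as damage, and flags identical questions with different answers as contradictory. Ambiguity generally still needs a human reviewer.

```python
import random
from collections import defaultdict

# Assumption: records are dicts with hypothetical "question" and "answer" fields.
Sample = dict[str, str]
REQUIRED_FIELDS = ("question", "answer")


def sample_records(dataset: list[Sample], k: int, seed: int = 0) -> list[Sample]:
    """Draw up to k records uniformly at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(dataset, min(k, len(dataset)))


def check_sample(sample: Sample) -> list[str]:
    """Return the problems found in one record; an empty list means it passed."""
    problems = []
    # Integrity: every required field is present and non-empty.
    for name in REQUIRED_FIELDS:
        if not sample.get(name, "").strip():
            problems.append(f"missing or empty field: {name}")
    # Damage: U+FFFD usually means the text was decoded incorrectly.
    if any("\ufffd" in value for value in sample.values()):
        problems.append("contains U+FFFD (possible encoding damage)")
    return problems


def score_dataset(dataset: list[Sample], k: int, seed: int = 0) -> float:
    """Estimate dataset quality as the fraction of sampled records with no problems."""
    picked = sample_records(dataset, k, seed)
    if not picked:
        return 0.0
    return sum(1 for s in picked if not check_sample(s)) / len(picked)


def find_conflicts(dataset: list[Sample]) -> set[str]:
    """Consistency: return questions that appear with more than one distinct answer."""
    answers: defaultdict[str, set[str]] = defaultdict(set)
    for s in dataset:
        answers[s.get("question", "").strip()].add(s.get("answer", "").strip())
    return {q for q, a in answers.items() if q and len(a) > 1}
```

A fixed `seed` makes the sample reproducible, so two evaluators scoring the same task see the same records.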

Data representativeness evaluation:

- Domain coverage evaluation: Check whether the dataset represents every domain involved in the pre-training job. For example, the pre-training dataset of a general-purpose language model should include text from a wide range of industries, such as technology, finance, culture, and sports, so that the model can handle inputs on diverse topics.

- Distribution rationality check: Analyze the distribution of data in different categories or features. If the data volume from a particular domain is excessively large, the model may become overly biased toward that domain.

- Data diversity evaluation: Check whether the data sources are diverse. For example, in the news domain, data should ideally be collected from multiple news sources.
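The three representativeness checks above can be approximated with simple counts. The sketch below is illustrative only: it assumes every record carries hypothetical `domain` and `source` labels, and the 0.5 share threshold is an arbitrary example rather than a recommended value. Which domains are required and what share is acceptable depend on the pre-training job.

```python
from collections import Counter

# Assumption: each record carries hypothetical "domain" and "source" labels.
Record = dict[str, str]


def missing_domains(records: list[Record], required: list[str]) -> set[str]:
    """Domain coverage: required domains that do not appear in the dataset."""
    return set(required) - {r["domain"] for r in records}


def domain_shares(records: list[Record]) -> dict[str, float]:
    """Distribution: each domain's fraction of the total record count."""
    counts = Counter(r["domain"] for r in records)
    total = sum(counts.values())
    return {d: c / total for d, c in counts.items()} if total else {}


def overrepresented(records: list[Record], max_share: float = 0.5) -> set[str]:
    """Distribution: domains whose share exceeds max_share (an illustrative threshold)."""
    return {d for d, share in domain_shares(records).items() if share > max_share}


def source_count(records: list[Record], domain: str) -> int:
    """Diversity: number of distinct sources within one domain, e.g. news outlets."""
    return len({r["source"] for r in records if r["domain"] == domain})
```

For example, a dataset in which 75% of records come from news and that contains no sports text would be flagged by both `overrepresented` and `missing_domains`.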

Procedure

If you choose manual evaluation, create an evaluation task on ModelArts Studio as follows:

- Log in to ModelArts Studio and access the desired workspace.

- In the navigation pane, choose Data Engineering > Data Management > Data Evaluation. On the displayed page, click Create Evaluation Standard in the upper right corner. If you want to use a preset evaluation standard instead, skip ahead to the step in which you click Create Evaluation Task.

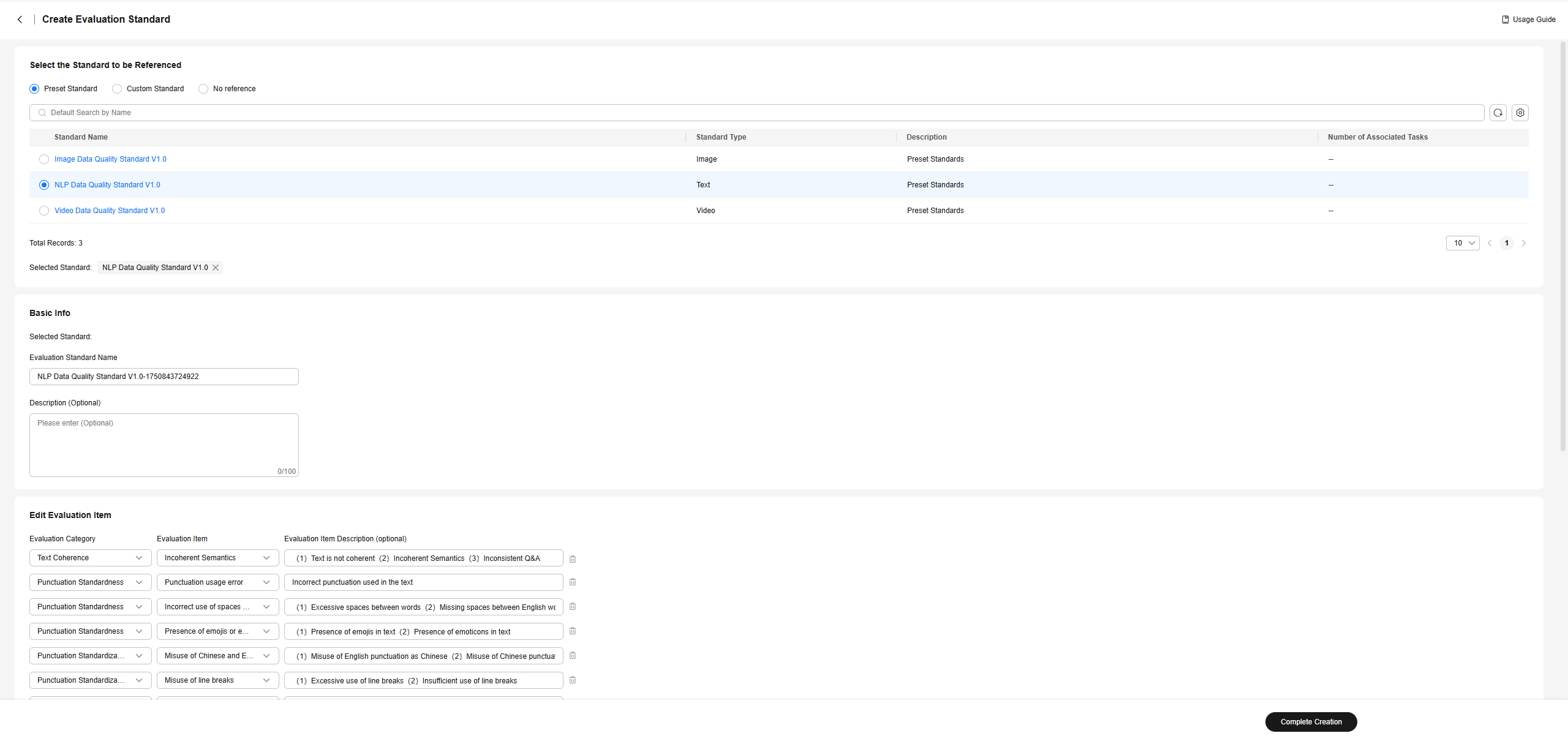

- On the Create Evaluation Standard page, select a preset standard to use as a reference and set Evaluation Standard Name and Description.

- Edit evaluation items. You can delete evaluation items or create custom evaluation items as required. When creating a custom evaluation item, ensure that the evaluation type, evaluation item, and evaluation item description are clear and unambiguous.

Figure 1 Editing an evaluation item

- Click Complete Creation. After an evaluation standard is created, you can view, edit, and delete it on the Manual Evaluation Standard page.

- Click Create Evaluation Task in the upper right corner of the page. On the Dataset Selection page, select the dataset to be evaluated and set the sampling specifications.

Figure 2 Creating an evaluation task

- Click Next Step and select the evaluation standard to use. Click Next Step again and set the evaluator.

- Click Next Step and enter the task name. Click Complete Creation to go to the Evaluation Task page. The status of the newly created task is Created.

- Click Evaluate in the Operation column to go to the evaluation page.

Figure 3 Data evaluation

- On the evaluation page, label the problems in the current data by referring to the evaluation items. Click Not Pass if the data does not meet the requirements, or Pass if it does. For a text dataset, you can right-click the question content to label the question.

- After all data is evaluated, confirm that the evaluation status shows 100%, which indicates that the dataset has been fully evaluated. Then return to the Evaluation Task page and click Report in the Operation column to obtain the dataset quality evaluation report.
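A report of this kind can be approximated by aggregating the Pass and Not Pass labels collected during evaluation. The sketch below is hypothetical: `EvaluationItem`, `SampleResult`, and `summarize` are illustrative names rather than ModelArts Studio APIs, and the report the console actually produces may contain different metrics.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EvaluationItem:
    """Hypothetical mirror of a console evaluation item (type, item name, description)."""
    eval_type: str
    name: str
    description: str


@dataclass
class SampleResult:
    """Outcome for one sampled record; verdict stays None until an evaluator labels it."""
    sample_id: str
    verdict: Optional[str] = None  # "Pass" or "Not Pass"
    failed_items: list[str] = field(default_factory=list)  # names of violated items


def summarize(standard: list[EvaluationItem], results: list[SampleResult]) -> dict:
    """Aggregate labels into progress, pass rate, and problem counts per evaluation item."""
    evaluated = [r for r in results if r.verdict is not None]
    passed = sum(1 for r in evaluated if r.verdict == "Pass")
    counts = Counter(name for r in evaluated for name in r.failed_items)
    return {
        # Fraction of sampled records labeled so far (the task is complete at 1.0).
        "progress": len(evaluated) / len(results) if results else 0.0,
        "pass_rate": passed / len(evaluated) if evaluated else 0.0,
        "issues_by_item": {item.name: counts[item.name] for item in standard},
    }
```

Reporting a count for every item in the standard, including items with zero problems, makes it easy to see which evaluation items the dataset satisfies as well as which it violates.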
