Combining and Publishing Datasets

Introduction to Data Combination

The quality and variety of data sources play a crucial role in developing specific capabilities in LLMs. Based on its origin, pre-training data falls into two types: general text data and industry-specific text data.

- General text data, which includes web pages, books, and dialogs, preserves a model's general-purpose capabilities and prevents overfitting to downstream tasks.

- Industry-specific text data improves the model's ability to solve downstream tasks.

For example, the data distribution for the Llama model is approximately as follows: 82% web page data, 6.5% code data, 4.5% book data, 4.5% encyclopedia data, and 2.5% paper data. Large code models that focus more on code generation need a higher proportion of code data.
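To make the quoted distribution concrete, the following minimal sketch converts the percentages into per-source token budgets. The total budget of 1,000,000 tokens and the function name `allocate_tokens` are illustrative assumptions; they are not values or APIs from Llama or ModelArts Studio.

```python
# Approximate mix quoted above, in percent of the pre-training corpus.
LLAMA_MIX_PERCENT = {
    "web": 82.0,
    "code": 6.5,
    "books": 4.5,
    "encyclopedia": 4.5,
    "papers": 2.5,
}


def allocate_tokens(mix_percent, total_tokens):
    """Split a token budget across sources according to percentage shares."""
    total_percent = sum(mix_percent.values())
    if abs(total_percent - 100.0) > 1e-9:
        raise ValueError(f"shares must sum to 100, got {total_percent}")
    # Rounding each share independently can leave the total off by a few
    # tokens for arbitrary budgets; that is acceptable for a planning sketch.
    return {
        name: round(total_tokens * share / 100.0)
        for name, share in mix_percent.items()
    }


budget = allocate_tokens(LLAMA_MIX_PERCENT, 1_000_000)  # hypothetical budget
for source, tokens in budget.items():
    print(f"{source}: {tokens}")
```

Raising the `code` share and lowering another share in the dictionary is one way to model the heavier code mix that a code-focused model would need, as long as the shares still sum to 100.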

During actual training, the ratio of general text data to industry-specific text data is crucial. If the proportion of industry-specific data is too high, the model may lose too many general capabilities. On the other hand, if the proportion is too low, the model may not effectively learn the necessary industry knowledge. Generally, the ratio of industry-specific data to general data is between 1:4 and 1:9. If the quality of industry-specific data is high, the proportion of industry-specific data can be increased. If you want to retain as many general capabilities as possible, it is advised to include more high-quality general data.
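A ratio such as 1:4 is easier to reason about as a share of the whole corpus. The sketch below converts an industry-to-general ratio into that share and checks it against the 1:4 to 1:9 guideline. The helper names `industry_share` and `within_typical_range` are hypothetical and used only for illustration.

```python
from fractions import Fraction


def industry_share(industry_parts, general_parts):
    """Return the industry-specific fraction of the corpus for a ratio
    industry_parts:general_parts."""
    if industry_parts <= 0 or general_parts <= 0:
        raise ValueError("both parts of the ratio must be positive")
    return Fraction(industry_parts, industry_parts + general_parts)


def within_typical_range(share):
    """Check a share against the 1:9 to 1:4 guideline (10% to 20%)."""
    return industry_share(1, 9) <= share <= industry_share(1, 4)


for parts in [(1, 4), (1, 9), (1, 1)]:
    share = industry_share(*parts)
    print(
        f"{parts[0]}:{parts[1]} -> {float(share):.0%} industry data, "
        f"within typical range: {within_typical_range(share)}"
    )
```

In other words, the guideline corresponds to industry-specific data making up roughly 10% to 20% of the combined corpus. A share outside that band is not necessarily wrong, since high-quality industry data can justify a larger proportion.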

For different industry scenarios, a more suitable combination strategy should be considered:

- Healthcare: Patient consultation, case analysis, and drug recommendation are key services, and typically require accurate and high-quality domain-specific data. The data combination strategy should prioritize medical field data and real-world data from individual hospitals to ensure the model can effectively process professional text and more practical cases.

- Finance: Financial news, stock market analysis reports, and financial regulations are key data sources. The data combination strategy should primarily focus on financial news and financial reports. However, the actual implementation should take data quality into account. If certain datasets contain a high volume of low knowledge-density content (e.g., financial reports), their proportion in the training data should be reduced.

- Legal: The focus should be on legal provisions, case law, judicial documents, and contracts. This domain has high requirements for professionalism, and the data often includes numerous names and locations. Therefore, targeted data processing is necessary. The data combination strategy should emphasize domain-specific legal content and avoid over-inclusion of general data. Given the typically high quality of legal documents, the proportion of industry-specific data can be increased to enhance the model's professional performance.

- Customer service: This domain includes customer conversation logs, FAQ data, and customer service manuals. The data combination should focus on content related to user interaction and question-answering. Customer conversation data is generally of lower quality, so the proportion of industry-specific data can be reduced.

Procedure for Data Combination

You can use the dataset combination function on ModelArts Studio as follows:

- Log in to ModelArts Studio and access the desired workspace.

- In the navigation pane, choose Data Engineering > Data Processing > Combine Task. On the displayed page, click Create data combine in the upper right corner.

- Select Text > Pre-trained Text, select the datasets to be combined, and click Next.

Figure 1 Selecting datasets to be combined

- Enter the proportion of records configured for each dataset and click Next.

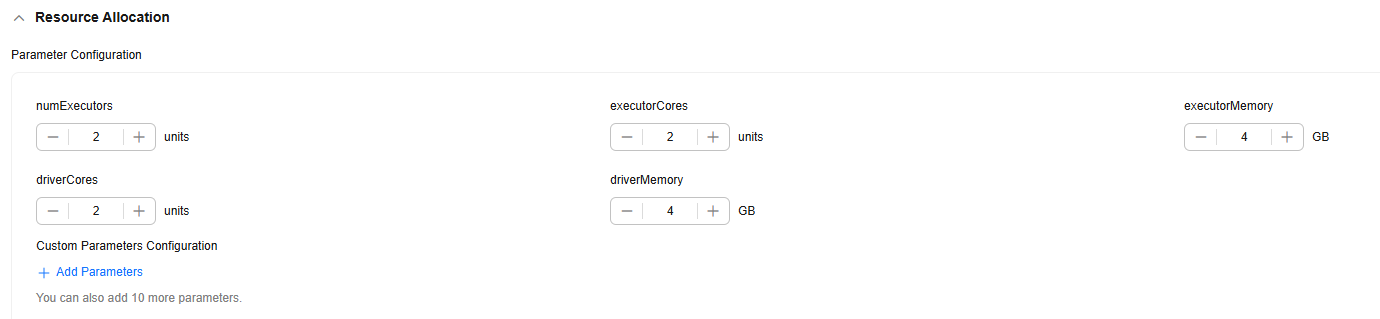

- Set Resource Allocation, Dataset Name, Description, and Extended Info.

Resource Allocation:

Expand the resource configuration and set task resources. You can also customize parameters. Click Add Parameters and enter the parameter name and value.

Table 1 Parameter configuration

| Parameter | Description |
|---|---|
| numExecutors | Number of executors. The default value is 2. An executor is a process running on a worker node. It executes tasks and returns the calculation result to the driver. Each core in an executor runs one task at a time, so adding executors (if they are available) lets more tasks run concurrently and improves efficiency. The minimum value of the product of numExecutors and executorMemory is 4, and the maximum value is 16. |
| executorCores | Number of CPU cores used by each executor process. The default value is 2. Multiple cores in an executor can run multiple tasks at the same time, which increases task concurrency. However, because all cores share the memory of an executor, you need to balance the memory and the number of cores. The minimum value of the product of numExecutors and executorMemory is 4, and the maximum value is 16. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| executorMemory | Memory size used by each executor process. The default value is 4. The executor memory is used for task execution and communication. You can increase the memory for a job that requires a large amount of resources, and run small jobs concurrently with less memory. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| driverCores | Number of CPU cores used by each driver process. The default value is 2. The driver schedules jobs and communicates with executors. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |
| driverMemory | Memory used by the driver process. The default value is 4. The driver schedules jobs and communicates with executors. Increase the driver memory when the number and parallelism of tasks increase. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |

Figure 2 Resource Allocation
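The constraints in Table 1 can be checked before you submit a task. The following is a minimal sketch that encodes the defaults and the two kinds of constraint exactly as the table words them. The `ResourceConfig` class and the `validate` function are hypothetical helpers, not part of ModelArts Studio, and the platform's own validation may differ.

```python
from dataclasses import dataclass


@dataclass
class ResourceConfig:
    # Defaults are the default values listed in Table 1.
    numExecutors: int = 2
    executorCores: int = 2
    executorMemory: int = 4
    driverCores: int = 2
    driverMemory: int = 4


def ratio_ok(cores, memory):
    """True if cores:memory lies between 1:2 and 1:4,
    that is, memory is 2x to 4x the number of cores."""
    return 2 * cores <= memory <= 4 * cores


def validate(cfg):
    """Return a list of violated constraints (empty if none are violated)."""
    problems = []
    # Product constraint, coded as worded in Table 1 (an assumption about
    # how the platform interprets it).
    product = cfg.numExecutors * cfg.executorMemory
    if not 4 <= product <= 16:
        problems.append(
            f"numExecutors * executorMemory = {product}, expected 4 to 16"
        )
    if not ratio_ok(cfg.executorCores, cfg.executorMemory):
        problems.append("executorCores:executorMemory outside 1:2 to 1:4")
    if not ratio_ok(cfg.driverCores, cfg.driverMemory):
        problems.append("driverCores:driverMemory outside 1:2 to 1:4")
    return problems


print(validate(ResourceConfig()))                # defaults
print(validate(ResourceConfig(numExecutors=5)))  # product becomes 20
```

With the default values the list of problems is empty. Raising numExecutors to 5 without changing executorMemory pushes the product past 16, so the sketch reports one violation.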

Automatically Generate Processing Dataset

Select this option and configure the information for generating a processed dataset, as shown in Figure 3. Click Confirm in the lower right corner. The platform starts the data combination task. After the task is successfully executed, a processed dataset is automatically generated.

If you do not select this option, click OK in the lower right corner. The platform starts the data combination task. After the combination task is successfully executed, manually generate a processed dataset.

(Optional) Extended Info

You can select the industry and language, or customize dataset properties.

Figure 4 Extended Info

- Click OK in the lower right corner of the page to return to the Data Combine Task page. On this page, you can view the status of the dataset combination task. If the status is Dataset generated successfully, the dataset combination is successful.

Procedure for Data Publishing

To use data for subsequent operations such as model training, you need to publish the dataset in a specific format. Text datasets can be published in standard format or Pangu format. For pre-training data, both formats are the same as those used in the preprocessing phase.

To create a text dataset publishing task, perform the following steps:

- Log in to ModelArts Studio and access the desired workspace.

- In the navigation pane, choose Data Engineering > Data Publishing > Publish Task. On the displayed page, click Create Data Publish Task in the upper right corner.

- On the Create Data Publish Task page, select Text > Pre-trained Text for dataset modality, select a dataset, and click Next.

Figure 5 Creating a data publishing task

- In the Basic Configuration area, select the data usage, dataset visibility, application scenario, and format.

- Set the parameters, dataset name, description, and extended information, and click Next.

Table 2 Parameter configuration

| Parameter | Description |
|---|---|
| numExecutors | Number of executors. The default value is 2. An executor is a process running on a worker node. It executes tasks and returns the calculation result to the driver. Each core in an executor runs one task at a time, so adding executors (if they are available) lets more tasks run concurrently and improves efficiency. The minimum value of the product of numExecutors and executorMemory is 4, and the maximum value is 16. |
| executorCores | Number of CPU cores used by each executor process. The default value is 2. Multiple cores in an executor can run multiple tasks at the same time, which increases task concurrency. However, because all cores share the memory of an executor, you need to balance the memory and the number of cores. The minimum value of the product of numExecutors and executorMemory is 4, and the maximum value is 16. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| executorMemory | Memory size used by each executor process. The default value is 4. The executor memory is used for task execution and communication. You can increase the memory for a job that requires a large amount of resources, and run small jobs concurrently with less memory. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| driverCores | Number of CPU cores used by each driver process. The default value is 2. The driver schedules jobs and communicates with executors. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |
| driverMemory | Memory used by the driver process. The default value is 4. The driver schedules jobs and communicates with executors. Increase the driver memory when the number and parallelism of tasks increase. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |

- If the task status is Succeeded, the data publishing task is successfully executed. You can choose Data Engineering > Data Publishing > Datasets in the navigation pane and click the Published Dataset tab to view the published dataset.
