Configuring HDFS EC Storage

Scenario

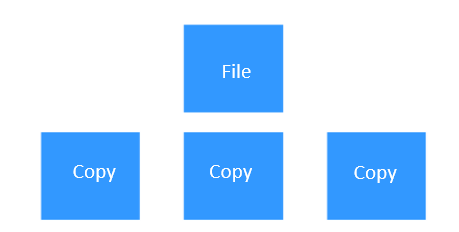

To ensure data reliability, HDFS stores three replicas of each block by default, so written data occupies three times its own size on disk and a large amount of space is wasted. To address this issue, HDFS introduces erasure coding (EC), a mature technology that is already used in RAID disk arrays.

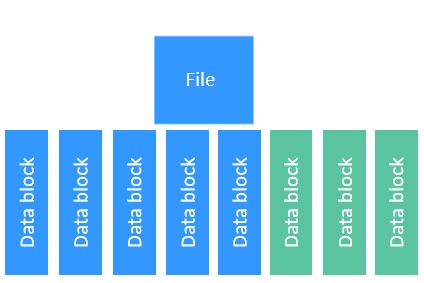

EC divides a file into small data blocks (128 KB, 256 KB, or 1 MB in size), stores these data blocks on multiple DataNodes, and stores the parity blocks generated by the EC encoding algorithm on other DataNodes. If a DataNode that stores data blocks becomes unavailable, the lost data blocks can be reconstructed from the parity blocks and the remaining data blocks stored on other DataNodes. The following list uses the RS-6-3-1024k policy as an example:

- The Reed Solomon (RS) encoding and decoding algorithm is used.

- 6 DataNodes are used to store data blocks.

- 3 DataNodes are used to store parity blocks.

- A maximum of 3 blocks can be lost.

- The size of each file block is 1024 KB (1 MB).

- If the size of a stored file is 100 MB, the total amount of data written to the DataNodes is (1 + 3/6) x 100 MB = 150 MB.

  - The total size of data blocks is the file size: 100 MB.

  - The total size of parity blocks is 3/6 x 100 MB = 50 MB.
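The arithmetic above generalizes to any policy with k data units and m parity units: raw usage is the file size multiplied by (k + m) / k. The following is a minimal sketch in POSIX shell. The function names `ec_raw_mb` and `replica_raw_mb` are hypothetical helpers, not HDFS commands, and the integer arithmetic assumes a file large enough that rounding effects are negligible.

```shell
# Hypothetical helpers (not part of the HDFS CLI).

# ec_raw_mb FILE_MB DATA_UNITS PARITY_UNITS
# Raw space used under EC: file size x (k + m) / k, in whole MB.
ec_raw_mb() {
    echo $(( $1 * ($2 + $3) / $2 ))
}

# replica_raw_mb FILE_MB REPLICAS
# Raw space used under replication: file size x replica count.
replica_raw_mb() {
    echo $(( $1 * $2 ))
}

ec_raw_mb 100 6 3       # RS-6-3: prints 150
replica_raw_mb 100 3    # three replicas: prints 300
```

For the 100 MB file in the example, the two results match the 150 MB stated above and the 300 MB that the three-copy policy would occupy.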

The differences between the storage using the three-copy policy and the storage using the EC policy are as follows:

EC storage saves a large amount of storage space without compromising reliability. For example, to achieve the same reliability as the three-copy policy, EC storage requires only 1.5 times the original file size, which is half of the space that three replicas occupy.

- HDFS has the following built-in EC encoding and decoding algorithms: XOR, RS-LEGACY (the old version of Reed-Solomon, which performs poorly and is not recommended), and RS (the new version of Reed-Solomon, with five times the performance of RS-LEGACY).

- HDFS has the following built-in EC policies: RS-3-2-1024k, RS-6-3-1024k, RS-10-4-1024k, RS-LEGACY-6-3-1024k, and XOR-2-1-1024k.

- Users can define custom EC policies by running commands on the client.

- An EC policy can be configured only for directories, not for files.

- After an EC policy is configured for a directory, all subdirectories that have no EC policy of their own inherit that policy.

- Files are distributed across multiple DataNodes when stored, so they cannot be read locally. Data locality is lost, which can cause serious data skew.

- When a file is written, computation is required to generate parity blocks or to verify data blocks against parity blocks, which consumes more CPU resources and increases complexity.

- When a DataNode is unavailable, lost data blocks must be reconstructed by computation, which consumes more CPU resources, increases complexity, and lengthens the recovery time.

- When reading or writing a file, the client must be connected to all DataNodes that hold the related data blocks and parity blocks at the same time.

- Currently, two basic FileSystem interfaces are not supported: append() and truncate(). Invoking either of them throws an exception. Invoking concat() on files with different EC policies also throws an exception.

Based on the preceding restrictions and problems, the EC feature is suitable for scenarios where a large amount of data is written at a time, but not for scenarios with the following files:

- A large number of small files

- Files with small data volume

- Files that remain open and are written to over a long period

- Files that need to be modified by invoking the append() or truncate() interface

Impact on the System

Compared with the default replica storage, the EC storage has the following impacts on the system:

- NameNodes, DataNodes, and clients consume more CPU resources, memory, and network resources.

- If a DataNode is abnormal, restoring its data takes longer and the read performance of the affected files decreases.

To prevent these impacts, you are advised to perform the following operations for the cluster that will deploy EC storage based on the service load:

- Increase the values of memory parameters (mainly the value of -Xmx) in GC_OPTS of NameNode and DataNode.

- Increase the values of memory parameters of the HDFS client and upper-layer components that depend on HDFS.

- Increase the value of dfs.datanode.max.transfer.threads of the DataNode.

- If the number of DataNodes in the cluster is less than 20, you are advised to change the value of dfs.namenode.redundancy.considerLoad to false.

Prerequisites

- The HDFS client is installed and available. For details, see Using the HDFS Client.

- If the cluster is in security mode, create a user that belongs to the supergroup group and run the kinit user command to log in to Kerberos.

- If the cluster is in common mode, run the export HADOOP_USER_NAME=omm command.

Operation Command Description

- To list all EC codec algorithms supported by the current cluster, run the hdfs ec -listCodecs command. Example:

    [root@10-219-254-200 ~]#hdfs ec -listCodecs
    Erasure Coding Codecs: Codec [Coder List]
    RS [RS_NATIVE, RS_JAVA]
    RS-LEGACY [RS-LEGACY_JAVA]
    XOR [XOR_NATIVE, XOR_JAVA]

- To list all EC policies in the current cluster, run the hdfs ec -listPolicies command. Example:

    [root@10-219-254-200 ~]#hdfs ec -listPolicies
    Erasure Coding Policies:
    ErasureCodingPolicy=[Name=RS-10-4-1024k, Schema=[ECSchema=[Codec=rs, numDataUnits=10, numParityUnits=4]], CellSize=1048576, Id=5], State=DISABLED
    ErasureCodingPolicy=[Name=RS-3-2-1024k, Schema=[ECSchema=[Codec=rs, numDataUnits=3, numParityUnits=2]], CellSize=1048576, Id=2], State=DISABLED
    ErasureCodingPolicy=[Name=RS-6-3-1024k, Schema=[ECSchema=[Codec=rs, numDataUnits=6, numParityUnits=3]], CellSize=1048576, Id=1], State=ENABLED
    ErasureCodingPolicy=[Name=RS-LEGACY-6-3-1024k, Schema=[ECSchema=[Codec=rs-legacy, numDataUnits=6, numParityUnits=3]], CellSize=1048576, Id=3], State=DISABLED
    ErasureCodingPolicy=[Name=XOR-2-1-1024k, Schema=[ECSchema=[Codec=xor, numDataUnits=2, numParityUnits=1]], CellSize=1048576, Id=4], State=ENABLED
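As the listing shows, each built-in policy name combines the codec, numDataUnits, numParityUnits, and the cell size in KB (CellSize=1048576 bytes appears as 1024k). The following sketch splits a policy name into those parts. It parses from the right because the codec itself can contain a hyphen (RS-LEGACY). `parse_ec_policy` is a hypothetical helper name, not an HDFS command.

```shell
# Hypothetical helper: split an EC policy name into its fields.
# Fields are peeled off from the right, since the codec may contain '-'.
parse_ec_policy() {
    name=$1
    cell=${name##*-}      # text after the last '-', e.g. 1024k
    rest=${name%-*}       # drop the cell size
    parity=${rest##*-}    # number of parity units
    rest=${rest%-*}       # drop the parity units
    data=${rest##*-}      # number of data units
    codec=${rest%-*}      # whatever remains is the codec
    echo "codec=$codec data=$data parity=$parity cell=$cell"
}

parse_ec_policy RS-LEGACY-6-3-1024k
# prints: codec=RS-LEGACY data=6 parity=3 cell=1024k
```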

- To enable a specified EC policy, run the hdfs ec -enablePolicy -policy <policyName> command. Example:

    [root@10-219-254-200 ~]#hdfs ec -enablePolicy -policy RS-3-2-1024k
    Erasure coding policy RS-3-2-1024k is enabled

Only an EC policy in the ENABLED state can be set for a directory.

- To disable a specified EC policy, run the hdfs ec -disablePolicy -policy <policyName> command. Example:

    [root@10-219-254-200 ~]#hdfs ec -disablePolicy -policy RS-3-2-1024k
    Erasure coding policy RS-3-2-1024k is disabled

- To add a customized EC policy, run the hdfs ec -addPolicies -policyFile <Configuration file path> command.

- First, create the configuration file of the customized EC policy, using the sample <HDFS client installation directory>/HDFS/hadoop/etc/hadoop/user_ec_policies.xml.template as a reference. The content of the sample is as follows:

    <?xml version="1.0"?>
    <configuration>
    <!-- The version of EC policy XML file format, it must be an integer -->
    <layoutversion>1</layoutversion>
    <schemas>
      <!-- schema id is only used to reference internally in this document -->
      <schema id="XORk2m1">
        <!-- The combination of codec, k, m and options as the schema ID, defines a unique schema, for example 'xor-2-1'. schema ID is case insensitive -->
        <!-- codec with this specific name should exist already in this system -->
        <codec>xor</codec>
        <k>2</k>
        <m>1</m>
        <options> </options>
      </schema>
      <schema id="RSk12m4">
        <codec>rs</codec>
        <k>12</k>
        <m>4</m>
        <options> </options>
      </schema>
      <schema id="RS-legacyk12m4">
        <codec>rs-legacy</codec>
        <k>12</k>
        <m>4</m>
        <options> </options>
      </schema>
    </schemas>
    <policies>
      <policy>
        <!-- the combination of schema ID and cellsize(in unit k) defines a unique policy, for example 'xor-2-1-256k', case insensitive -->
        <!-- schema is referred by its id -->
        <schema>XORk2m1</schema>
        <!-- cellsize must be an positive integer multiple of 1024(1k) -->
        <!-- maximum cellsize is defined by 'dfs.namenode.ec.policies.max.cellsize' property -->
        <cellsize>131072</cellsize>
      </policy>
      <policy>
        <schema>RS-legacyk12m4</schema>
        <cellsize>262144</cellsize>
      </policy>
    </policies>
    </configuration>

- The customized EC policy is in the DISABLED state by default and can be used only after being enabled.

Example:

    [root@10-219-254-200 ~]#hdfs ec -addPolicies -policyFile /opt/client/HDFS/hadoop/etc/hadoop/user_ec_policies.xml.template
    2018-12-31 17:50:17,666 INFO util.ECPolicyLoader: Loading EC policy file /opt/client/HDFS/hadoop/etc/hadoop/user_ec_policies.xml.template
    Add ErasureCodingPolicy XOR-2-1-128k succeed.
    Add ErasureCodingPolicy RS-LEGACY-12-4-256k succeed.
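The example output suggests how a custom policy is named: the codec in upper case, followed by k, m, and the cell size divided by 1024 with a k suffix (131072 bytes becomes 128k). The sketch below reproduces that naming and enforces the multiple-of-1024 rule stated in the template comments. `ec_policy_name` is a hypothetical helper, and the naming rule is inferred from the sample output rather than taken from a specification.

```shell
# Hypothetical helper: predict the policy name for a <schema>/<cellsize> pair.
# Usage: ec_policy_name CODEC K M CELLSIZE_BYTES
ec_policy_name() {
    # The template requires cellsize to be a positive multiple of 1024.
    if [ "$4" -le 0 ] || [ $(( $4 % 1024 )) -ne 0 ]; then
        echo "cellsize must be a positive multiple of 1024: $4" >&2
        return 1
    fi
    # Upper-case the codec; hyphens such as in rs-legacy are kept.
    codec=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
    echo "$codec-$2-$3-$(( $4 / 1024 ))k"
}

ec_policy_name xor 2 1 131072          # prints XOR-2-1-128k
ec_policy_name rs-legacy 12 4 262144   # prints RS-LEGACY-12-4-256k
```

Checking the predicted names before running hdfs ec -addPolicies makes it easier to spot a mistyped cell size, because the name is what later commands such as -enablePolicy expect.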


- To remove a specified EC policy, run the hdfs ec -removePolicy -policy <policy> command. Example:

    [root@10-219-254-200 ~]#hdfs ec -removePolicy -policy XOR-2-1-128k
    Erasure coding policy XOR-2-1-128k is removed

- To set an EC policy of a directory, run the hdfs ec -setPolicy -path <path> [-policy <policy>] [-replicate] command. Example:

    [root@10-219-254-200 ~]#hdfs ec -setPolicy -path /test -policy RS-6-3-1024k
    Set RS-6-3-1024k erasure coding policy on /test
    Warning: setting erasure coding policy on a non-empty directory will not automatically convert existing files to RS-6-3-1024k erasure coding policy

- After an EC policy is set for a directory, the storage mode of original files in the directory remains unchanged. The newly created files are stored according to the EC policy.

- The -replicate parameter is used to set a three-copy storage policy instead of an EC policy for a directory.

- -replicate and -policy <policyName> cannot be used at the same time.
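Because -policy and -replicate are mutually exclusive, a wrapper script can make the invalid combination impossible before HDFS is called. The following is a minimal sketch. `set_dir_policy`, the `--replicate` keyword, and the DRY_RUN variable are hypothetical names introduced here. By default the function only prints the command so that it can be reviewed; set DRY_RUN=0 to execute it.

```shell
# Hypothetical wrapper around `hdfs ec -setPolicy`.
# Usage: set_dir_policy PATH POLICY_NAME   -> EC policy
#        set_dir_policy PATH --replicate   -> three-copy storage
set_dir_policy() {
    if [ $# -ne 2 ]; then
        echo "usage: set_dir_policy PATH (POLICY_NAME | --replicate)" >&2
        return 2
    fi
    # Exactly one of -policy / -replicate ends up on the command line.
    if [ "$2" = "--replicate" ]; then
        set -- hdfs ec -setPolicy -path "$1" -replicate
    else
        set -- hdfs ec -setPolicy -path "$1" -policy "$2"
    fi
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"        # dry run: show the command only
    else
        "$@"             # run the real command
    fi
}

set_dir_policy /test RS-6-3-1024k
set_dir_policy /test --replicate
```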

- To get the EC policy of a specified directory, run the hdfs ec -getPolicy -path <path> command. Example:

    [root@10-219-254-200 ~]#hdfs ec -getPolicy -path /test
    RS-6-3-1024k

- To unset the EC policy of a specified directory, run the hdfs ec -unsetPolicy -path <path> command. Example:

    [root@10-219-254-200 ~]#hdfs ec -unsetPolicy -path /test
    Unset erasure coding policy from /test
    Warning: unsetting erasure coding policy on a non-empty directory will not automatically convert existing files to replicated data.

After the EC policy of a directory is unset, the directory inherits the EC policy of its parent directory if the parent directory has one.

Suggestions on Using EC for Upper-Level Services

Given the current capabilities of EC storage, EC is recommended for the following upper-level services: Yarn, MapReduce, HBase, Hive, and Spark2x.

The HDFS directories of each service that can use the EC storage are listed in Table 1:

| Service | HDFS directory | Parameter | Description |
|---|---|---|---|
| Yarn | /tmp/logs | yarn.nodemanager.remote-app-log-dir | Directory for storing container log files. |
| Yarn | /tmp/archived | yarn.nodemanager.remote-app-log-archive-dir | Directory for storing archived task log files. |
| MapReduce | /mr-history/tmp | - | Directory for storing logs generated by MapReduce jobs. |
| MapReduce | /mr-history/done | - | Directory for storing logs managed by MR JobHistory Server. |
| HBase | /hbase/.tmp | - | Temporary directory for creating tables. |
| HBase | /hbase/archive | - | Archive directory. |
| HBase | /hbase/data | - | Table data directory. |
| Hive | /user/hive/warehouse | hive.metastore.warehouse.dir | Hive data warehouse directory. |
| Hive | /tmp/hive-scratch | hive.exec.scratchdir | Temporary data directory run by Hive jobs. |
| Spark2x | /user/hive/warehouse | hive.metastore.warehouse.dir | Default directory for storing table data. |

The EC policy cannot be used for data directories listed in Table 2. Otherwise, services fail to run. If the EC policy is configured for these directories, HDFS automatically cancels the EC policy and sets the replica policy for these directories.

| Service | HDFS directory | Parameter | Description |
|---|---|---|---|
| Public | /tmp | - | HDFS temporary directory for storing temporary files. |
| Public | /user | - | HDFS user directory for storing user data. |
| HBase | /hbase | hbase.data.rootdir | HBase data root directory. |
| HBase | /hbase/.hbase-snapshot | - | Table Snapshot directory. |
| HBase | /hbase/.hbck | - | Directory for storing hfiles (such as out-of-bound hfiles) that fail the hbck check. |
| HBase | /hbase/MasterProcWALs | - | Directory for storing Proc logs. |
| HBase | /hbase/WALs | - | Directory for storing WALs. |
| HBase | /hbase/corrupt | - | Directory for storing damaged HFiles. |
| HBase | /hbase/oldWALs | - | Directory to which data in WALs is migrated. |
| Spark2x | /user/spark2x | spark.yarn.stagingDir | Directory for storing temporary files generated during Spark2x application running. |
| Spark2x | /tmp/spark2x/sparkhive-scratch | hive.exec.scratchdir | Directory for storing temporary files generated during SQL execution on JDBCServer. |
| Spark2x | /spark2xJobHistory2x | spark.eventLog.dir | Directory for storing event logs. |
| Spark2x | /user/spark2x/jars | - | Directory for storing Spark2x JAR files. |
| CarbonData | /user/hive/warehouse/carbon.store/ | carbon.storelocation | Directory for storing data files. |
| Flink | /flink | - | Directory for storing Flink running snapshot information for task restoration. |

You can specify the list of HDFS directories for which the EC policy cannot be used by setting dfs.no.ec.dirs. Use commas (,) to separate multiple values.

EC and Other Storage Policies

EC supports the following storage policies: HOT, COLD, and ALL_SSD.

If other storage policies (such as WARM, ONE_SSD, PROVIDED, and LAZY_PERSIST) are configured, the default storage policy (HOT) will be used.

Information about all blocks needs to be obtained for reading and writing EC files. To ensure the consistency of the read and write time of all blocks, the blocks need to be stored on the disks with the same performance. Only HOT, COLD, and ALL_SSD storage policies meet this condition.
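The fallback rule described in this section can be written as a simple lookup. The sketch below is only an illustration of that rule; `effective_storage_policy` is a hypothetical helper name, not an HDFS command.

```shell
# Hypothetical helper: which storage policy takes effect for EC files.
effective_storage_policy() {
    case "$1" in
        HOT|COLD|ALL_SSD) echo "$1" ;;   # supported together with EC
        *)                echo "HOT" ;;  # anything else falls back to the default
    esac
}

effective_storage_policy ALL_SSD   # prints ALL_SSD
effective_storage_policy WARM      # prints HOT
```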

Impact of EC on DistCp

If the source file of DistCp contains an EC file, different parameters need to be added based on different requirements.

- To preserve the EC policy of a file during the replication process, the -preserveec parameter needs to be added to the DistCp command.

- If the file is rewritten to a destination directory based on the EC policy (or replication policy) of the destination directory but not of the source file, the -skipcrccheck parameter needs to be added to the DistCp command.

If neither of the two parameters is added, the DistCp task fails because files stored with different EC policies have different checksum values.

Conversion Between Replica Files and EC Files

Convert a replica file into an EC file. (For example, convert all files in the /src directory into the EC files with the RS-6-3-1024k policy.)

- Run the following command to create a temporary directory (for example, /tmp/convert_tmp_dir, which must be a directory that does not exist) to temporarily store converted data.

hdfs dfs -mkdir /tmp/convert_tmp_dir

- Run the following command to enable the EC policy RS-6-3-1024k. (If it has been enabled, skip this step.)

hdfs ec -enablePolicy -policy RS-6-3-1024k

- Run the following command to set the EC policy of /tmp/convert_tmp_dir to RS-6-3-1024k.

hdfs ec -setPolicy -path /tmp/convert_tmp_dir -policy RS-6-3-1024k

- Run the following DistCp command to copy files. In this case, all files are written into the temporary directory based on the RS-6-3-1024k policy.

hadoop distcp -numListstatusThreads 40 -update -delete -skipcrccheck -pbugpaxt /src /tmp/convert_tmp_dir

- Run the following command to move /src to /src-to-delete.

hdfs dfs -mv /src /src-to-delete

- Run the following command to move /tmp/convert_tmp_dir to /src.

hdfs dfs -mv /tmp/convert_tmp_dir /src

- Run the following command to delete /src-to-delete.

hdfs dfs -rm -r -f /src-to-delete

Deleting a file is a high-risk operation. Ensure that the file is no longer needed before performing this operation.

Convert an EC file into a replica file. (For example, convert all files in the /src directory into replica files.)

- Run the following command to create a temporary directory (for example, /tmp/convert_tmp_dir, which must be a directory that does not exist) to temporarily store converted data.

hdfs dfs -mkdir /tmp/convert_tmp_dir

- Run the following command to set /tmp/convert_tmp_dir to a replica policy.

hdfs ec -setPolicy -path /tmp/convert_tmp_dir -replicate

- Run the following DistCp command to copy files. In this case, all files are written into the temporary directory based on the replica policy.

hadoop distcp -numListstatusThreads 40 -update -delete -skipcrccheck -pbugpaxt /src /tmp/convert_tmp_dir

- Run the following command to move /src to /src-to-delete.

hdfs dfs -mv /src /src-to-delete

- Run the following command to move /tmp/convert_tmp_dir to /src.

hdfs dfs -mv /tmp/convert_tmp_dir /src

- Run the following command to delete /src-to-delete.

hdfs dfs -rm -r -f /src-to-delete
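Both procedures follow the same sequence and differ only in how the policy of the temporary directory is set. The following sketch wraps the steps in one function. `convert_dir`, the `run` helper, the `--replicate` keyword, and the DRY_RUN variable are hypothetical names introduced here. By default the commands are only printed so that they can be reviewed; with DRY_RUN=0 they are executed and the function stops at the first failed step. The final deletion is deliberately left as a manual step because it is a high-risk operation.

```shell
# Print a command (default) or execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: convert_dir SRC TMP POLICY_NAME   (replica files -> EC files)
#        convert_dir SRC TMP --replicate   (EC files -> replica files)
# TMP must not exist yet.
convert_dir() {
    src=$1 tmp=$2 policy=$3
    run hdfs dfs -mkdir "$tmp" || return 1
    if [ "$policy" = "--replicate" ]; then
        run hdfs ec -setPolicy -path "$tmp" -replicate || return 1
    else
        # Assumption: a failure here may only mean the policy is already
        # enabled, so continue; -setPolicy fails if it is not enabled.
        run hdfs ec -enablePolicy -policy "$policy" ||
            echo "enablePolicy failed, continuing" >&2
        run hdfs ec -setPolicy -path "$tmp" -policy "$policy" || return 1
    fi
    run hadoop distcp -numListstatusThreads 40 -update -delete \
        -skipcrccheck -pbugpaxt "$src" "$tmp" || return 1
    run hdfs dfs -mv "$src" "${src}-to-delete" || return 1
    run hdfs dfs -mv "$tmp" "$src" || return 1
    echo "Verify $src, then delete ${src}-to-delete manually." >&2
}

convert_dir /src /tmp/convert_tmp_dir RS-6-3-1024k
```

Calling `convert_dir /src /tmp/convert_tmp_dir --replicate` prints the command sequence for the reverse conversion.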
