Configuring Spark Event Log Rolling

Scenario

Event log rolling is a log management technology that splits log files into multiple files by time or size to prevent a single log file from being too large. Rolling log files can improve log management and analysis efficiency, especially when a large amount of log data is processed.

Event logs record various events during the execution of Spark applications, such as task start and completion, and stage submission and completion. If a program, for example JDBCServer or Spark Streaming, runs for a long period of time and has run many jobs and tasks during this period, many events are recorded in the log file, significantly increasing the file size. Enabling event log rolling helps manage the size of log files and prevents problems caused by oversized log files.
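Size-based rolling can be illustrated with a small, self-contained sketch. This is not Spark's implementation: the class name `RollingEventWriter`, the file names `events_<index>`, and the 1 KB limit are assumptions chosen for illustration only.

```python
import json
import os
import tempfile


class RollingEventWriter:
    """Toy size-based roller (hypothetical, not Spark code).

    Starts a new file whenever the next event would push the
    current file past max_bytes.
    """

    def __init__(self, directory, max_bytes):
        self.directory = directory
        self.max_bytes = max_bytes
        self.index = 0          # index of the file currently being written
        self.current_size = 0   # bytes already written to that file

    def _path(self):
        # Illustrative naming only; real Spark file names differ.
        return os.path.join(self.directory, f"events_{self.index}")

    def write(self, event):
        line = (json.dumps(event) + "\n").encode("utf-8")
        # Roll over before writing if the event would exceed the limit.
        if self.current_size > 0 and self.current_size + len(line) > self.max_bytes:
            self.index += 1
            self.current_size = 0
        with open(self._path(), "ab") as f:
            f.write(line)
        self.current_size += len(line)


def demo_rolling(num_events=100, max_bytes=1024):
    """Write num_events small events and return the resulting file sizes."""
    with tempfile.TemporaryDirectory() as d:
        writer = RollingEventWriter(d, max_bytes)
        for i in range(num_events):
            writer.write({"Event": "TaskEnd", "Task ID": i})
        names = sorted(os.listdir(d))
        return [os.path.getsize(os.path.join(d, n)) for n in names]


if __name__ == "__main__":
    print(demo_rolling())
```

Instead of one ever-growing file, the events end up in several files that each stay at or below the configured limit, which is the effect event log rolling has on a long-running application.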

When log rollover is enabled, metadata events are written into the log file and job events are written into a new log file (whether a job event is written to the new log file depends on the file size). Metadata events include EnvironmentUpdate, BlockManagerAdded, BlockManagerRemoved, UnpersistRDD, ExecutorAdded, ExecutorRemoved, MetricsUpdate, ApplicationStart, ApplicationEnd, and LogStart. Job events include StageSubmitted, StageCompleted, TaskResubmit, TaskStart, TaskEnd, TaskGettingResult, JobStart, and JobEnd. For Spark SQL applications, job events also include ExecutionStart and ExecutionEnd.
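The two event groups listed above can be written down as a simple lookup. The function name `classify_event` and the `spark_sql` flag are hypothetical, and the event names are copied from this page as written, so this is only a sketch of the grouping rather than Spark's own routing logic.

```python
# Event names as listed on this page.
METADATA_EVENTS = {
    "EnvironmentUpdate", "BlockManagerAdded", "BlockManagerRemoved",
    "UnpersistRDD", "ExecutorAdded", "ExecutorRemoved", "MetricsUpdate",
    "ApplicationStart", "ApplicationEnd", "LogStart",
}
JOB_EVENTS = {
    "StageSubmitted", "StageCompleted", "TaskResubmit", "TaskStart",
    "TaskEnd", "TaskGettingResult", "JobStart", "JobEnd",
}
# Counted as job events only for Spark SQL applications.
SQL_JOB_EVENTS = {"ExecutionStart", "ExecutionEnd"}


def classify_event(name, spark_sql=False):
    """Return 'metadata', 'job', or 'unknown' for an event name."""
    if name in METADATA_EVENTS:
        return "metadata"
    if name in JOB_EVENTS or (spark_sql and name in SQL_JOB_EVENTS):
        return "job"
    return "unknown"


if __name__ == "__main__":
    for event in ("LogStart", "TaskEnd", "ExecutionStart"):
        print(event, classify_event(event, spark_sql=True))
```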

The Spark HistoryServer builds its UI by reading and parsing these log files. The memory size of the HistoryServer process is fixed before the process starts, so loading and parsing very large log files may cause problems such as insufficient memory and driver GC.

To allow the log files of large applications to be loaded with limited memory, enable event log rolling for those applications. Generally, this function is recommended for long-running applications.

Configuring Parameters

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark > Configurations, click All Configurations, and search for the following parameters and adjust their values:

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.eventLog.rolling.enabled | Whether to enable rolling for event log files. If this parameter is set to true, each event log file is capped at the configured size. true: Spark enables event log rolling and splits log files into multiple files based on the configured policy. false: Spark disables event log rolling and writes all logs to a single file. | true |
| spark.eventLog.rolling.maxFileSize | Maximum size of an event log file before it is rolled over. Takes effect when spark.eventLog.rolling.enabled is set to true. Value range: no less than 10 MB. | 128M |
| spark.eventLog.compression.codec | Codec used to compress event logs. By default, Spark provides four codecs: LZ4, LZF, Snappy, and ZSTD. If this parameter is not specified, spark.io.compression.codec is used. The options are lz4, lzf, snappy, and zstd. | None |
| spark.eventLog.logStageExecutorMetrics | Whether to write the peak metrics of each executor in each stage to the event log. true: Spark records the peak metrics of each executor in each stage in the event log. false: Spark does not record these metrics in the event log. | false |
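As a sanity check before entering values, the parameters above can be assembled and validated in a few lines. This is a sketch under assumptions: the helper names `parse_size`, `build_event_log_conf`, and `to_submit_args` are hypothetical, the size parser handles only a simplified subset of Spark's size strings (k, m, g), and whether your cluster accepts these settings as `spark-submit --conf` overrides is not stated on this page.

```python
import re

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
MIN_ROLL_BYTES = 10 * 1024 ** 2          # documented lower bound: 10 MB
CODECS = {"lz4", "lzf", "snappy", "zstd"}  # options listed in the table


def parse_size(text):
    """Parse a size such as '128M' or '10mb' into bytes (simplified)."""
    match = re.fullmatch(r"(\d+)\s*([kmg])b?", text.strip().lower())
    if not match:
        raise ValueError(f"unsupported size string: {text!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]


def build_event_log_conf(max_file_size="128M", codec=None,
                         log_stage_executor_metrics=False):
    """Return the event log settings as a dict, validating the inputs."""
    if parse_size(max_file_size) < MIN_ROLL_BYTES:
        raise ValueError("spark.eventLog.rolling.maxFileSize must be >= 10 MB")
    if codec is not None and codec not in CODECS:
        raise ValueError(f"unknown codec: {codec!r}")
    conf = {
        "spark.eventLog.rolling.enabled": "true",
        "spark.eventLog.rolling.maxFileSize": max_file_size,
        "spark.eventLog.logStageExecutorMetrics":
            str(log_stage_executor_metrics).lower(),
    }
    if codec is not None:
        # Left unset, spark.io.compression.codec applies, per the table.
        conf["spark.eventLog.compression.codec"] = codec
    return conf


def to_submit_args(conf):
    """Render the settings as spark-submit '--conf key=value' arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    print(" ".join(to_submit_args(build_event_log_conf(codec="zstd"))))
```

Validating the size up front catches a value below the 10 MB minimum before it reaches the cluster.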

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

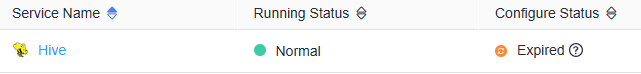

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

Components are unavailable during the restart, affecting upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that the operation does not have adverse impact.
