Configuring Vectorized ORC Data Reading

Scenarios

Optimized Row Columnar (ORC) is a columnar storage format in the Hadoop ecosystem, introduced by Apache Hive to reduce storage usage and speed up Hive queries. Like Parquet, ORC is not a purely columnar format: a table is first divided into row groups (called stripes in ORC), and within each row group the data is stored column by column. Because values of the same column are stored together, the data compresses efficiently.

In Spark 2.3 and later, Spark SQL supports vectorized reading of ORC files (a feature previously available in Hive). Vectorized reading processes data in batches rather than one row at a time, and often makes data access several times faster than non-vectorized reading.

In an MRS cluster, Spark reads ORC data in vectorized mode by default, so no extra configuration is needed to benefit from it. Spark SQL can read and write ORC data using either of the following ORC implementations:

- hive: uses the ORC processing library built in Hive, which maintains compatibility with ORC files generated by earlier versions of Hive.

- native: uses the native ORC processing library of Spark SQL, offering enhanced performance and simplified maintenance.

You can modify Spark configurations on the cluster management page to adjust settings for vectorized ORC data reading.
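For a quick experiment on a single job, Spark properties can generally also be passed at submission time with `spark-submit --conf key=value`. The following is a minimal sketch that only builds such a command string from the four properties covered in this section, using the default values listed in this document. The helper names and the application file `my_job.py` are hypothetical, and whether per-job overrides are honored in a particular MRS cluster is an assumption to verify.

```python
import shlex

# Default values as listed in this document for an MRS cluster.
ORC_READ_SETTINGS = {
    "spark.sql.orc.enableVectorizedReader": "true",
    "spark.sql.codegen.wholeStage": "true",
    "spark.sql.codegen.maxFields": "100",
    "spark.sql.orc.impl": "hive",
}


def to_conf_args(settings):
    """Render settings as spark-submit arguments: --conf key=value ..."""
    args = []
    for key, value in settings.items():
        args += ["--conf", f"{key}={value}"]
    return args


def build_submit_command(app, overrides=None):
    """Build a spark-submit command line; overrides replace the defaults."""
    settings = {**ORC_READ_SETTINGS, **(overrides or {})}
    parts = ["spark-submit", *to_conf_args(settings), app]
    return " ".join(shlex.quote(p) for p in parts)


if __name__ == "__main__":
    # my_job.py is a hypothetical application file.
    print(build_submit_command("my_job.py", {"spark.sql.orc.impl": "native"}))
```

Keeping the defaults in one dictionary makes it easy to see which values a job deviates from.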

Notes and Constraints

To use Spark SQL's built-in vectorized mode for reading ORC data, all columns must be of type AtomicType. AtomicType covers every type other than null, UDTs, arrays, structs, and maps. If any column has one of these unsupported types, Spark SQL's vectorized ORC reading mode cannot deliver the expected performance.
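The constraint above can be checked before running a query. The sketch below is a simplified illustration that works on `(column name, type string)` pairs in the notation PySpark returns from `DataFrame.dtypes`, for example `("tags", "array<string>")`. It flags only array, map, and struct columns. Detection of null and UDT columns is omitted, and the function name and sample schema are hypothetical.

```python
# Type-string prefixes of complex Spark SQL types, in the notation
# used by DataFrame.dtypes (for example "array<int>" or "map<string,int>").
COMPLEX_PREFIXES = ("array<", "map<", "struct<")


def non_atomic_columns(dtypes):
    """Return the names of columns whose type string denotes a complex type."""
    return [
        name
        for name, type_str in dtypes
        if type_str.strip().lower().startswith(COMPLEX_PREFIXES)
    ]


if __name__ == "__main__":
    # Hypothetical schema for illustration only.
    sample = [
        ("id", "bigint"),
        ("price", "decimal(10,2)"),
        ("tags", "array<string>"),
        ("attrs", "map<string,int>"),
    ]
    print(non_atomic_columns(sample))  # ['tags', 'attrs']
```

An empty result suggests that the schema contains no array, map, or struct columns. It does not by itself guarantee that vectorized reading is used.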

Parameters

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the following parameters, and adjust their values as needed.

Table 1 Parameters for configuring vectorized ORC data reading

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.sql.orc.enableVectorizedReader | Whether to enable vectorized ORC data reading. true: Vectorized ORC data reading is enabled. This is the default value. false: Vectorized ORC data reading is disabled. When ORC files contain deeply nested structures, arrays, or complex mappings, the vectorized reader may fail to process them efficiently. In cases of severe data skew, the vectorized ORC reader may cause disproportionate memory allocation to a single task, potentially leading to out-of-memory (OOM) errors. To mitigate this, disabling vectorized reading is recommended. | true |
| spark.sql.codegen.wholeStage | Whether to enable Whole-Stage Java Code Generation, an optimization that fuses multiple physical operators (such as filters, maps, and aggregations) into a single, optimized Java function. This fusion significantly improves execution performance of Spark queries. true: Whole-Stage Java Code Generation is enabled. This is the default and recommended value. false: Whole-Stage Java Code Generation is disabled. For simple scan or filter operations, the overhead introduced by Whole-Stage Code Generation may outweigh its performance benefits. In such cases, you can consider disabling this function. | true |
| spark.sql.codegen.maxFields | Maximum number of output fields (including nested fields) that Whole-Stage Code Generation supports. If the number of fields in a table or dataset exceeds the configured threshold, Spark automatically disables Whole-Stage Code Generation and falls back to interpreted execution for that part of the query. The default value is 100. You may need to adjust the value based on the actual situation for queries involving wide tables or complex nested structures. It is recommended to monitor the execution plan and logs to determine an optimal value. This helps prevent code generation failures or performance degradation when the number of fields exceeds the configured threshold. When using vectorized ORC data reading in Spark SQL, the value of this parameter must be greater than or equal to the number of columns in the schema. | 100 |
| spark.sql.orc.impl | ORC implementation used by Spark for reading ORC data. hive: uses the ORC processing library built in Hive, which maintains compatibility with ORC files generated by earlier versions of Hive. This is the default value. It is particularly reliable when Spark needs to process complex ORC files originating from Hive (such as files with special encodings or partitioning formats). native: uses Spark's built-in ORC parser. This engine is highly optimized for performance and supports vectorized reading. It is ideal for processing standard ORC files within the Spark ecosystem. | hive |

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

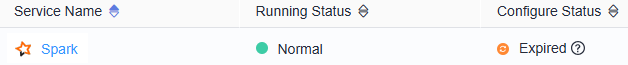

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you submit tasks from the Spark client, download the client again after modifying any of the cluster parameters spark.sql.orc.enableVectorizedReader, spark.sql.codegen.wholeStage, spark.sql.codegen.maxFields, or spark.sql.orc.impl so that the new configuration takes effect. For details, see Using an MRS Client.

Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that it has no adverse impact on services.
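The conditions that Table 1 places on vectorized ORC reading can be summarized as a small pre-check. The sketch below is illustrative only: it takes a plain dictionary of property values and a column count, and lists the reasons why vectorized reading may not apply. The function name is hypothetical. The requirement that whole-stage code generation be enabled is an assumption based on open-source Spark behavior and is not stated in this document.

```python
def vectorized_read_blockers(settings, num_columns):
    """Return reasons why vectorized ORC reading may not apply (empty if none)."""

    def flag(key):
        # Unset keys fall back to "true", the default given in Table 1.
        return str(settings.get(key, "true")).strip().lower() == "true"

    blockers = []
    if not flag("spark.sql.orc.enableVectorizedReader"):
        blockers.append("spark.sql.orc.enableVectorizedReader is false")
    # Assumption (not stated in this document): whole-stage code generation
    # must also be enabled, as in open-source Spark.
    if not flag("spark.sql.codegen.wholeStage"):
        blockers.append("spark.sql.codegen.wholeStage is false")
    # Table 1: maxFields must be >= the number of columns in the schema.
    max_fields = int(settings.get("spark.sql.codegen.maxFields", 100))
    if num_columns > max_fields:
        blockers.append(
            f"{num_columns} columns exceed spark.sql.codegen.maxFields={max_fields}"
        )
    return blockers


if __name__ == "__main__":
    # Hypothetical wide table with 250 columns and the default threshold.
    print(vectorized_read_blockers({"spark.sql.codegen.maxFields": "100"}, 250))
```

Returning a list of reasons rather than a single boolean makes it clear which parameter to adjust on the configuration page.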
