How Do I Find the Pod That Is Using a GPU or NPU Based on the GPU or NPU Information?

In a CCE cluster, there is no direct way to list the pods that are using a given GPU or NPU. However, you can use kubectl commands to find those pods based on the GPU or NPU information, so that you can promptly evict them if the GPU or NPU malfunctions.

Prerequisites

- You have created a CCE cluster and configured kubectl for it. For details, see Connecting to a Cluster Using kubectl.

- You have installed CCE AI Suite (NVIDIA GPU) or CCE AI Suite (Ascend NPU) in the cluster. For details, see CCE AI Suite (NVIDIA GPU) and CCE AI Suite (Ascend NPU). The NPU driver version must be later than 23.0.

Procedure

To find the pod that is using a GPU or NPU, obtain the GPU or NPU information on the cluster node and use kubectl to search for the corresponding pod.

- Log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Nodes. In the right pane, click the Nodes tab and view the IP address of the GPU node. The following uses 192.168.0.106 as an example.

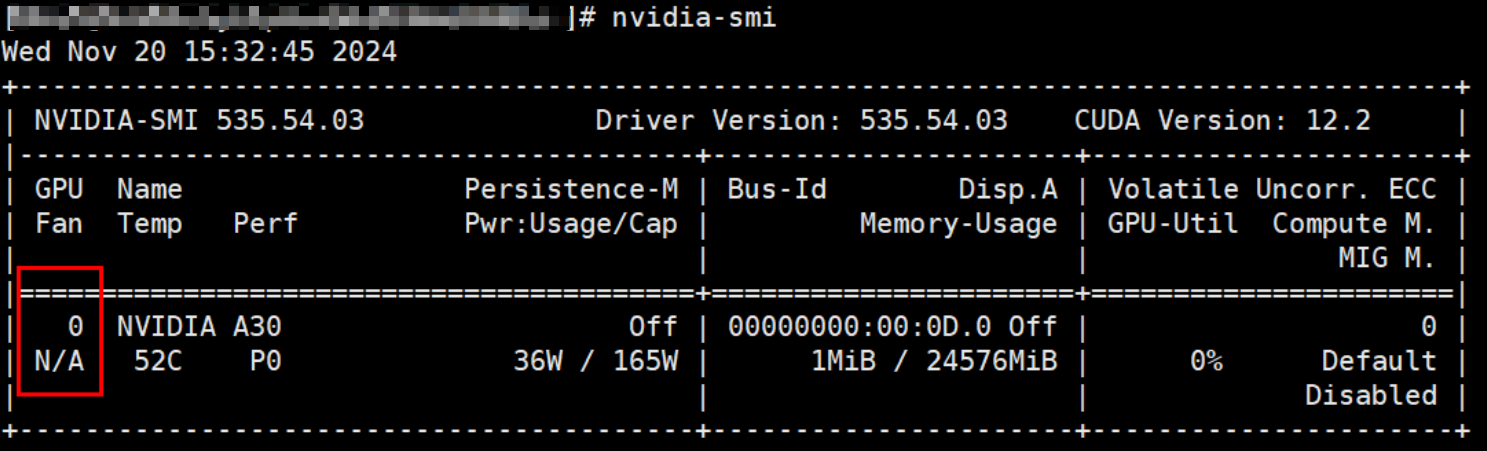

- Log in to the GPU node and view the GPU information:

nvidia-smi

The command output shows that GPU 0 is present. GPU 0 is used as an example to describe how to locate the pod that uses this GPU.

- Find the pod that uses the GPU based on the node IP address (192.168.0.106) and device ID (GPU 0).

kubectl get pods --all-namespaces -o jsonpath='{range .items[?(@.spec.nodeName=="192.168.0.106")]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{.metadata.annotations}{"\n"}{end}' | grep nvidia0 | awk '{print $1, $2}'

This command lists all pods on the node with the IP address 192.168.0.106, keeps the pods whose annotations include nvidia0 (GPU 0), and displays the namespace and name of each matching pod.

In this example, the command output shows that the pod named k8-job-rhblr in the default namespace is using GPU 0 on the node with the IP address 192.168.0.106.
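The GPU lookup above can be wrapped in a small reusable function. The following is a minimal sketch, not part of the CCE tooling: the function names filter_pods_by_device and find_pods_by_device are hypothetical, and the sketch swaps the JSONPath node filter for the standard kubectl --field-selector option. It also matches the device name only against the annotations column, so a pod whose name happens to contain nvidia0 is not reported, and it assumes the device name contains no regular-expression metacharacters and that each pod's annotations print on a single line.

```shell
#!/bin/sh
# Hypothetical helper: reads "namespace<TAB>pod<TAB>annotations" lines on stdin
# and prints "namespace pod" for lines whose annotations mention the device.
# The trailing ([^0-9]|$) keeps nvidia1 from also matching nvidia10.
filter_pods_by_device() {
  awk -F'\t' -v dev="$1" '$3 ~ (dev "([^0-9]|$)") { print $1, $2 }'
}

# Hypothetical wrapper: $1 is the node name (the node IP address in this
# document's examples), $2 is the device name such as nvidia0.
find_pods_by_device() {
  node_name="$1"
  device="$2"
  kubectl get pods --all-namespaces \
    --field-selector "spec.nodeName=${node_name}" \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{.metadata.annotations}{"\n"}{end}' \
    | filter_pods_by_device "$device"
}

# Example call (requires kubectl access to the cluster):
#   find_pods_by_device 192.168.0.106 nvidia0
```

Filtering on the server with --field-selector avoids transferring pods from other nodes, which may matter in large clusters.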

- To locate a pod that uses an NPU, log in to the CCE console and click the cluster name to access the cluster console. In the navigation pane, choose Nodes. In the right pane, click the Nodes tab and view the IP address of the NPU node. The following uses 192.168.0.138 as an example.

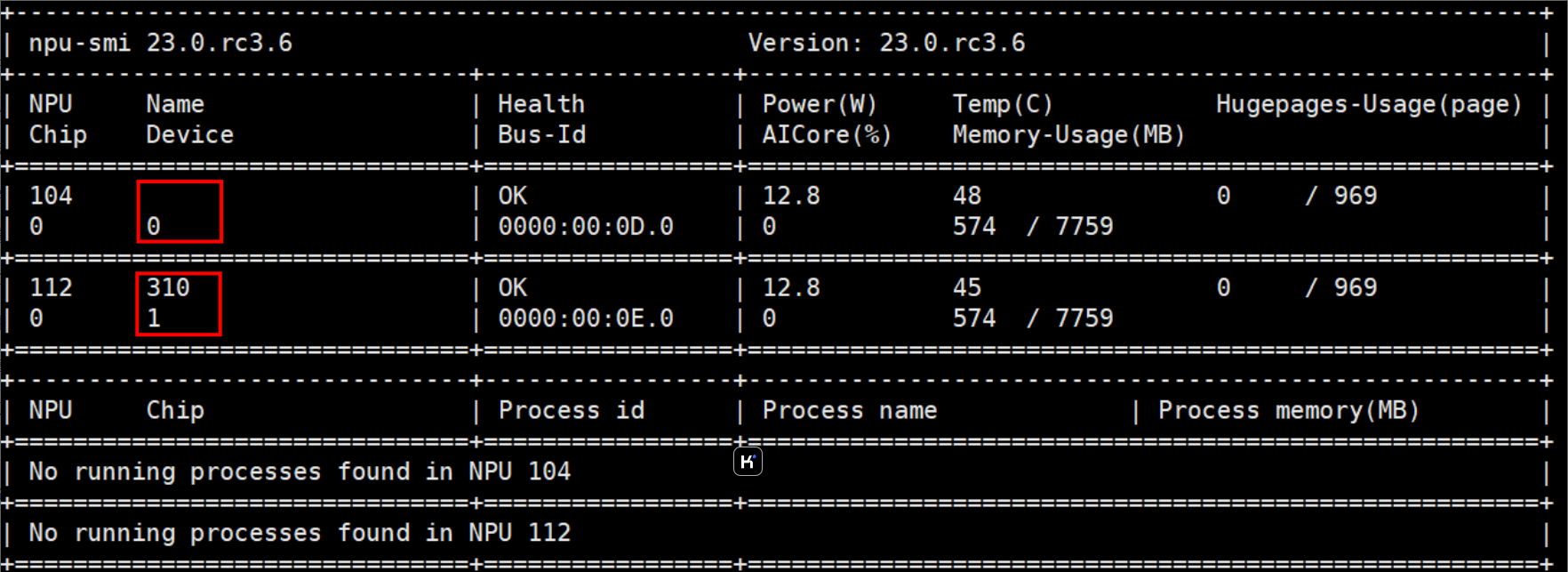

- Log in to the NPU node and view the NPU information:

npu-smi info

The command output shows that device 0 and device 1 are present. Device 0 is used as an example to describe how to locate the pod that uses this NPU.

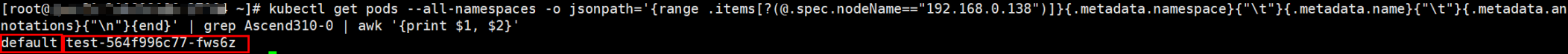

- Find the pod that uses the NPU based on the node IP address (192.168.0.138) and device ID (NPU 0).

kubectl get pods --all-namespaces -o jsonpath='{range .items[?(@.spec.nodeName=="192.168.0.138")]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{.metadata.annotations}{"\n"}{end}' | grep Ascend310-0 | awk '{print $1, $2}'

This command lists all pods on the node with the IP address 192.168.0.138, keeps the pods whose annotations include Ascend310-0 (NPU 0), and displays the namespace and name of each matching pod.

In this example, the command output shows that the pod named test-564f996c77-fws6z in the default namespace is using NPU 0 on the node with the IP address 192.168.0.138.
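Once the pod is identified, one simple way to move it off a faulty device is to delete it with kubectl delete pod. The sketch below is an illustration under assumptions, not a procedure from this page: the function name print_delete_commands is hypothetical, and it only prints the delete commands for review rather than running them. Deleting a pod is not the same as using the Kubernetes eviction API, and a pod managed by a workload controller is recreated after deletion, so where the replacement is scheduled should be checked separately.

```shell
#!/bin/sh
# Hypothetical helper: reads "namespace pod" lines on stdin (the format printed
# by the lookup commands above) and prints one kubectl delete command per pod.
# Nothing is deleted; the output is meant to be reviewed before it is run.
print_delete_commands() {
  while read -r ns pod; do
    # Skip blank or incomplete lines.
    [ -n "$ns" ] && [ -n "$pod" ] || continue
    printf 'kubectl delete pod %s -n %s\n' "$pod" "$ns"
  done
}

# Example call:
#   echo "default test-564f996c77-fws6z" | print_delete_commands
```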

- If a different NPU model is used, replace Ascend310 in Ascend310-0 with the name of that NPU.

- The NPU driver version must be 23.0 or later.
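The device name used in the NPU search combines the NPU name and the device ID, as in Ascend310-0. As a minimal sketch, the hypothetical helper npu_device_names below builds these search strings for several device IDs at once; the name passed for other NPU models must be the one that actually appears in the pod annotations in your cluster, which this sketch does not verify.

```shell
#!/bin/sh
# Hypothetical helper: $1 is the NPU name as it appears in pod annotations
# (Ascend310 in this document's example); the remaining arguments are device IDs.
# Prints one search string per device ID, for example Ascend310-0.
npu_device_names() {
  chip="$1"
  shift
  for idx in "$@"; do
    printf '%s-%s\n' "$chip" "$idx"
  done
}

# Example call for the two devices reported by npu-smi info:
#   npu_device_names Ascend310 0 1
```

Each printed string can be used in place of Ascend310-0 in the grep step of the search command.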
