Optimizing the Parameters of a Job for Migrating Data from Apache Kafka to MRS Kafka

Optimizing Source Parameters

Optimization of data extraction from Kafka

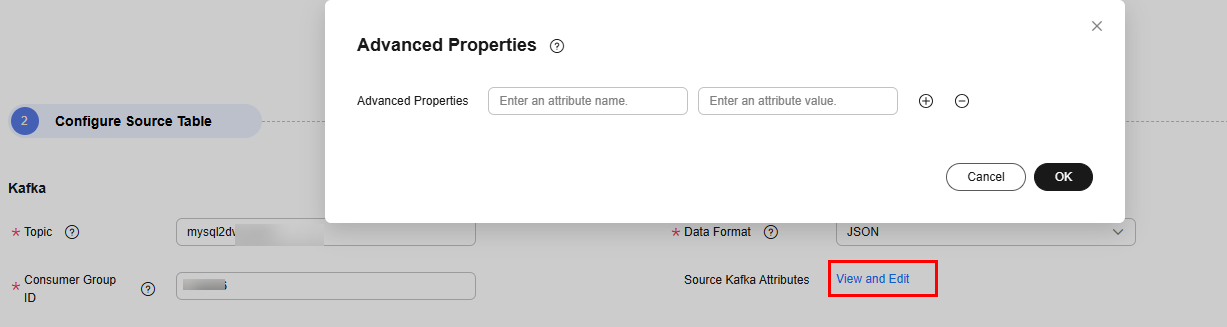

In the source configuration, click Source Kafka Attributes to add Kafka tuning parameters.

The following tuning parameters are available.

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| properties.fetch.max.bytes | int | 57671680 | Maximum number of bytes returned for each fetch request when Kafka data is consumed. If individual Kafka messages are large, increase this value so that each fetch returns more data, which improves performance. |
| properties.max.partition.fetch.bytes | int | 1048576 | Maximum number of bytes per partition returned by the server when Kafka data is consumed. If individual Kafka messages are large, increase this value so that each fetch returns more data, which improves performance. |
| properties.max.poll.records | int | 500 | Maximum number of records returned by a consumer in each poll. Increasing this value lets each poll return more records, which can improve performance. |
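As a rough illustration of how these attributes relate, the following Python sketch merges `properties.*` attributes over the default values listed in the table and estimates how many partitions can each return a full `max.partition.fetch.bytes` chunk within a single `fetch.max.bytes` response. The helper names (`to_consumer_config`, `full_partitions_per_fetch`) are hypothetical, and the assumption that stripping the `properties.` prefix yields the Kafka consumer setting name is ours, not documented CDM behavior. Kafka treats both byte limits as soft limits (a record batch larger than the limit is still returned so the consumer can make progress), so the result is only an approximation.

```python
from typing import Dict

# Assumption: CDM attribute keys carry a "properties." prefix, and the
# remainder is the Kafka consumer setting name.
PREFIX = "properties."

# Default values as listed in the tuning parameter table.
DEFAULTS: Dict[str, int] = {
    "fetch.max.bytes": 57671680,
    "max.partition.fetch.bytes": 1048576,
    "max.poll.records": 500,
}


def to_consumer_config(attributes: Dict[str, str]) -> Dict[str, int]:
    """Hypothetical helper: merge user-supplied 'properties.*' attributes over the defaults."""
    config = dict(DEFAULTS)
    for key, value in attributes.items():
        if not key.startswith(PREFIX):
            continue  # not a prefixed consumer property; ignore it
        name = key[len(PREFIX):]
        parsed = int(value)  # all three parameters are typed int
        if parsed <= 0:
            raise ValueError(f"{key} must be a positive integer, got {value!r}")
        config[name] = parsed
    return config


def full_partitions_per_fetch(config: Dict[str, int]) -> int:
    """Hypothetical helper: how many partitions can each return a full
    max.partition.fetch.bytes chunk before one fetch response reaches
    fetch.max.bytes (ignoring Kafka's soft-limit behavior)."""
    return config["fetch.max.bytes"] // config["max.partition.fetch.bytes"]


if __name__ == "__main__":
    default_cfg = to_consumer_config({})
    print(full_partitions_per_fetch(default_cfg))  # 57671680 // 1048576 = 55

    tuned_cfg = to_consumer_config({"properties.max.partition.fetch.bytes": "4194304"})
    print(full_partitions_per_fetch(tuned_cfg))  # 57671680 // 4194304 = 13
```

With the defaults, exactly 55 full partition chunks fit in one response. Raising `properties.max.partition.fetch.bytes` to 4194304 (4 MiB) without also raising `properties.fetch.max.bytes` lowers that to 13, so the two byte limits may be worth tuning together.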

Optimizing Destination Parameters

Optimization of data writing to Kafka

Data is generally written to Kafka quickly. If writing is slow, increase the job concurrency.
