Optimizing the Parameters of a Job for Migrating Data from MySQL to GaussDB(DWS)

Optimizing Source Parameters

Optimization of data extraction from MySQL

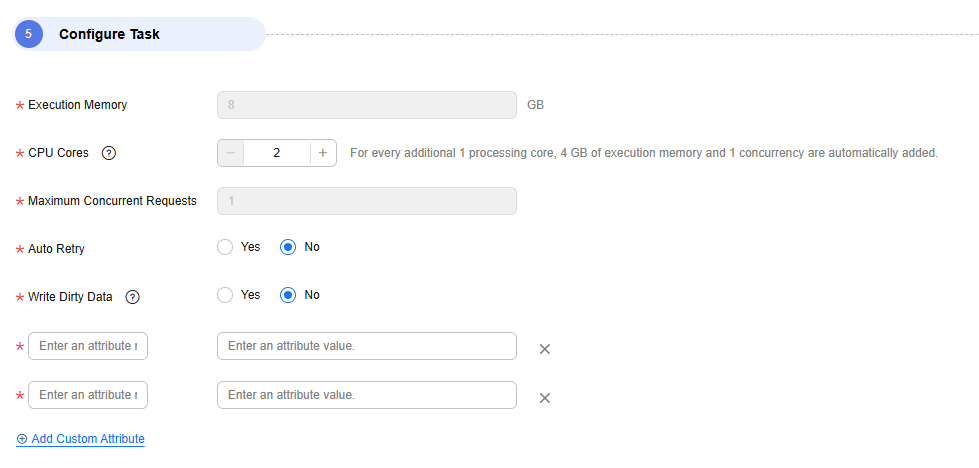

You can click Add Custom Attribute in the Configure Task area and add MySQL synchronization parameters.

The following tuning parameters are available.

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| scan.incremental.snapshot.backfill.skip | boolean | true | Whether to skip reading Binlog data. Skipping Binlog reading effectively reduces memory usage, but provides only an at-least-once guarantee. |
| scan.incremental.snapshot.chunk.size | int | 50000 | Shard size, which determines the maximum number of data records in a single shard and the number of shards in the full migration phase. The larger the shard size, the more data records in a single shard and the fewer the shards. If a table has a large number of records, the job is divided into many shards, which occupies too much memory. To avoid this issue, increase the shard size to reduce the number of shards. If scan.incremental.snapshot.backfill.skip is false, the real-time processing migration job caches the data of a single shard, so a larger shard occupies more memory and can cause memory overflow. To avoid this issue, reduce the shard size. |
| scan.snapshot.fetch.size | int | 1024 | Maximum number of data records that can be extracted from the MySQL database in a single request during full data extraction. Increasing this value reduces the number of requests to the MySQL database and improves performance. |
| debezium.max.queue.size | int | 8192 | Maximum number of data records held in the data cache queue. If a single data record in the source table is too large (for example, 1 MB), caching too many records causes memory overflow. In this case, reduce the value. |
| debezium.max.queue.size.in.bytes | int | 0 | Maximum size of the data cache queue, in bytes. The default value 0 indicates that the cache queue is limited by the number of data records instead of the data size. If debezium.max.queue.size cannot effectively limit memory usage, explicitly set this parameter to limit the size of cached data. |
| jdbc.properties.socketTimeout | int | 300000 | Socket timeout, in milliseconds, for the connection to the MySQL database in the full migration phase. The default value is 5 minutes. If the MySQL database is overloaded and a SocketTimeout exception occurs for a job, increase the value of this parameter. |
| jdbc.properties.connectTimeout | int | 60000 | Connection timeout, in milliseconds, for connecting to the MySQL database in the full migration phase. The default value is 1 minute. If the MySQL database is overloaded and a ConnectTimeout exception occurs for a job, increase the value of this parameter. |
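To make the shard size trade-off concrete, the following Python sketch estimates how many shards a table produces for different values of scan.incremental.snapshot.chunk.size. It assumes that shards are cut evenly by row count, which is a simplification of the actual splitting logic. The function name `estimate_shards` and the 200-million-row table are hypothetical.

```python
def estimate_shards(row_count: int, chunk_size: int = 50000) -> int:
    """Approximate shard count, assuming the table is split evenly by row count."""
    if row_count < 0:
        raise ValueError("row_count must not be negative")
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    # Integer ceiling division: a partially filled last shard still counts.
    return (row_count + chunk_size - 1) // chunk_size


rows = 200_000_000  # hypothetical table size
for size in (50_000, 200_000, 1_000_000):
    print(f"chunk.size={size}: about {estimate_shards(rows, size)} shards")
```

A larger shard size lowers the shard count, but if scan.incremental.snapshot.backfill.skip is false, each shard that is cached also holds more records, so the value has to balance the two effects.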

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| debezium.max.queue.size | int | 8192 | Maximum number of data records held in the data cache queue. If a single data record in the source table is too large (for example, 1 MB), caching too many records causes memory overflow. In this case, reduce the value. |
| debezium.max.queue.size.in.bytes | int | 0 | Maximum size of the data cache queue, in bytes. The default value 0 indicates that the cache queue is limited by the number of data records instead of the data size. If debezium.max.queue.size cannot effectively limit memory usage, explicitly set this parameter to limit the size of cached data. |
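The memory impact of the cache queue can be estimated with simple arithmetic. The sketch below is a rough worst-case calculation, assuming the queue is full and every record has the same size. The helper names and the 512 MiB memory budget are hypothetical and only illustrate how the two queue parameters might be chosen.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def queue_memory_bytes(max_queue_size: int, record_bytes: int) -> int:
    """Worst-case cached bytes when the queue is full of equally sized records."""
    return max_queue_size * record_bytes


def queue_size_for_budget(budget_bytes: int, record_bytes: int) -> int:
    """Largest record count that fits the budget (at least 1)."""
    return max(1, budget_bytes // record_bytes)


# Default queue size with 1 MB records, as in the example in the table.
worst_case = queue_memory_bytes(8192, 1 * MIB)
print(f"worst case: {worst_case / GIB:.0f} GiB")

# Hypothetical 512 MiB budget for the cache queue.
budget = 512 * MIB
custom_attributes = {
    "debezium.max.queue.size": str(queue_size_for_budget(budget, 1 * MIB)),
    # Alternatively, cap the queue by size instead of by record count.
    "debezium.max.queue.size.in.bytes": str(budget),
}
print(custom_attributes)
```

With the default of 8192 records and 1 MB per record, a full queue would hold about 8 GiB, which is why reducing the value or setting a byte limit is worth considering for wide rows.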

Optimizing Destination Parameters

Optimization of data writing to GaussDB(DWS)

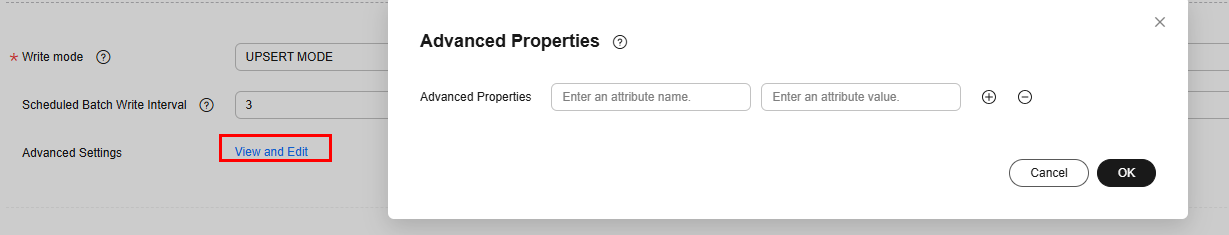

You can modify writing parameters in the GaussDB(DWS) destination configuration or click View and Edit in the advanced configuration to add advanced attributes.

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| Write Mode | enum | UPSERT | Mode for writing data to GaussDB(DWS), which can be set in the destination configuration. COPY MODE is recommended for real-time migration jobs. |
| Maximum Data Volume for Batch Write | int | 50000 | Maximum number of data records that can be written to GaussDB(DWS) at a time. You can set this parameter in the destination configuration. Data is written when either Maximum Data Volume for Batch Write or Scheduled Batch Write Interval is reached. Increasing the number of data records written at a time reduces the number of GaussDB(DWS) requests, but may increase the duration of a single request and the amount of cached data, which affects memory usage. Adjust the value based on the GaussDB(DWS) specifications and load. |
| Scheduled Batch Write Interval | int | 3 | Interval for writing data to GaussDB(DWS). You can set this parameter in the destination configuration. When the interval is reached, cached data is written. Increasing the value of this parameter increases the number of data records cached for a single write, but data takes longer to become visible in GaussDB(DWS). |
| sink.buffer-flush.max-size | int | 512 | Maximum amount of data, in MB, that can be written to GaussDB(DWS) at a time. The default value is 512 MB. You can set this parameter in the advanced settings of the destination configuration. When the size of cached data reaches this limit, data is written. As with Maximum Data Volume for Batch Write, increasing the amount of data written at a time reduces the number of GaussDB(DWS) requests, but may increase the duration of a single request and the amount of cached data, which affects memory usage. Adjust the value based on the GaussDB(DWS) specifications and load. |
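The three batching parameters above act as alternative triggers: cached data is written as soon as any one of them is reached. The following Python sketch models that decision. It is an illustration rather than the actual sink implementation. The class and method names are hypothetical, the interval unit is assumed to be seconds (the table does not state it), and the rule that an empty buffer is never written is also an assumption.

```python
from dataclasses import dataclass

MIB = 1024 * 1024


@dataclass
class FlushPolicy:
    max_rows: int = 50000        # Maximum Data Volume for Batch Write
    interval_s: float = 3.0      # Scheduled Batch Write Interval (seconds assumed)
    max_bytes: int = 512 * MIB   # sink.buffer-flush.max-size (512 MB)

    def should_flush(self, rows: int, nbytes: int, elapsed_s: float) -> bool:
        """Return True when any one of the three thresholds is reached."""
        if rows == 0:
            return False  # assumption: nothing to write for an empty buffer
        return (
            rows >= self.max_rows
            or nbytes >= self.max_bytes
            or elapsed_s >= self.interval_s
        )


policy = FlushPolicy()
print(policy.should_flush(rows=50000, nbytes=10 * MIB, elapsed_s=0.5))  # row limit
print(policy.should_flush(rows=100, nbytes=1 * MIB, elapsed_s=3.0))     # interval
print(policy.should_flush(rows=100, nbytes=1 * MIB, elapsed_s=0.5))     # keep caching
```

Raising any threshold lets more data accumulate before a write, which lowers the request count at the cost of more cached data and later visibility in GaussDB(DWS).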
