Step 1: Design a Process

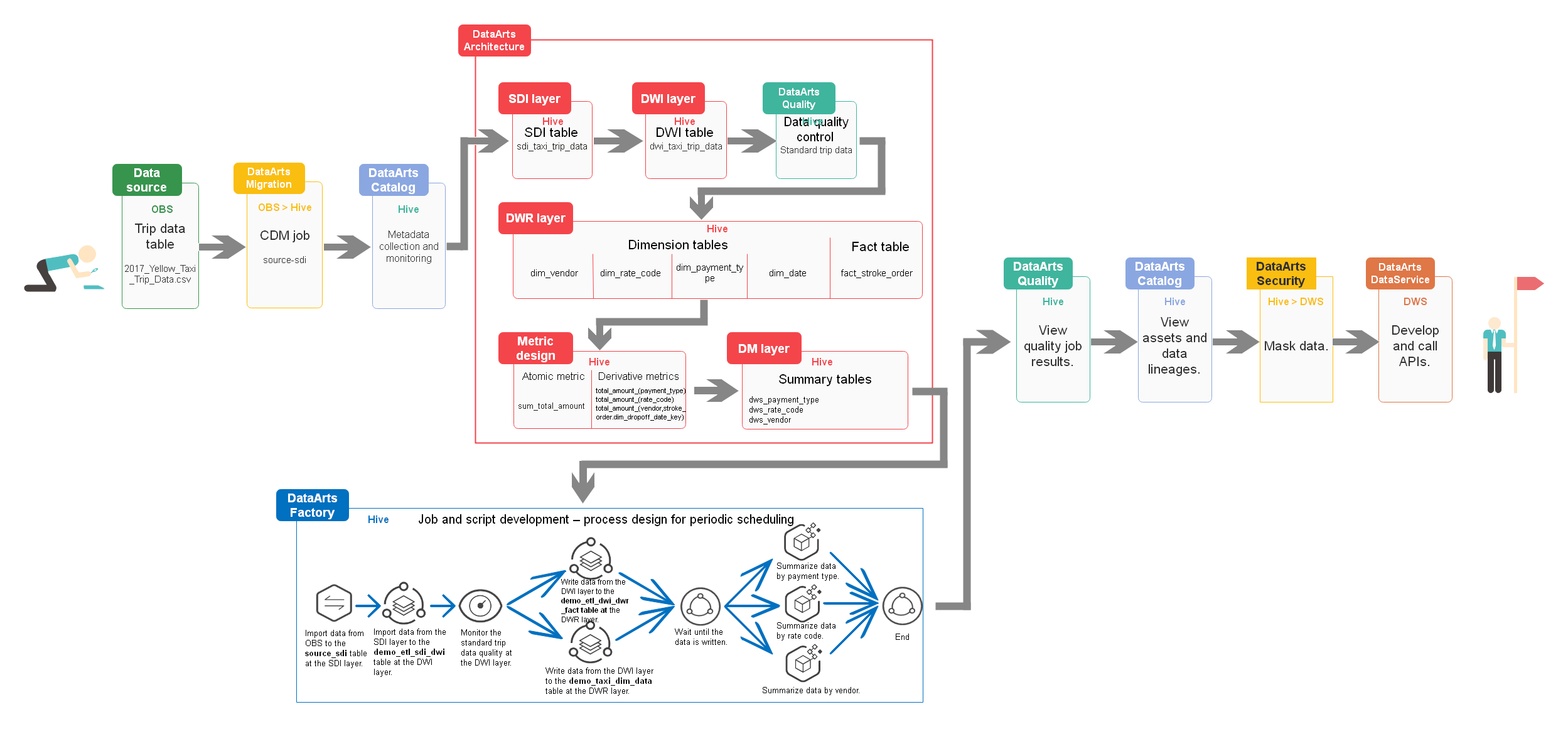

This guide uses the 2017 operations statistics of a taxi vendor as an example. Figure 1 shows the data governance process, which is based on requirement analysis and a service survey.

Requirement Analysis

Requirement analysis helps you develop a data governance framework to support the process design for data governance.

The existing data has the following problems:

- No standardized model is available.

- There is no standard for data field naming.

- Data content is not standardized, and data quality is uncontrollable.

- Statistics standards are inconsistent, hindering business decision-making.

Data governance is expected to deliver the following:

- Standardized data and models

- Unified statistics standards and high-quality data reports

- Data quality monitoring and alarm

The following statistics are required:

- Daily revenue statistics

- Monthly revenue statistics

- Statistics on the revenue proportion of each payment type
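The three revenue statistics in the list above (daily, monthly, and proportion by payment type) can be illustrated with a minimal Python sketch. It is not part of DataArts Studio: the record layout, the field order, and the sample values are all hypothetical, chosen only to show how the three statistics relate to the same trip records.

```python
from collections import defaultdict
from datetime import date

# Hypothetical trip records: (trip date, payment type, fare amount).
# The layout and values are made up for illustration only.
trips = [
    (date(2017, 1, 1), "Credit card", 12.50),
    (date(2017, 1, 1), "Cash", 7.50),
    (date(2017, 1, 2), "Credit card", 20.00),
    (date(2017, 2, 1), "Cash", 10.00),
]


def revenue_statistics(records):
    """Return (daily revenue, monthly revenue, revenue share per payment type)."""
    daily = defaultdict(float)
    monthly = defaultdict(float)
    by_payment = defaultdict(float)
    for trip_date, payment_type, amount in records:
        daily[trip_date] += amount
        monthly[(trip_date.year, trip_date.month)] += amount
        by_payment[payment_type] += amount
    total = sum(by_payment.values())
    # Guard against an empty input so the division is always defined.
    proportion = {
        payment: (amount / total if total else 0.0)
        for payment, amount in by_payment.items()
    }
    return dict(daily), dict(monthly), proportion


daily, monthly, proportion = revenue_statistics(trips)
print(daily[date(2017, 1, 1)])  # revenue on 2017-01-01
print(monthly[(2017, 1)])       # revenue in January 2017
print(proportion["Cash"])       # share of total revenue paid in cash
```

Computing all three statistics from one pass over the same records is one way to keep the statistics standards consistent, because every report then starts from the same definition of revenue.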

Service Survey

Before using DataArts Studio, conduct a service survey to understand the component functions required in the service process and analyze the subsequent service load.

| No. | Configuration Item | Information to Be Collected | Survey Result | Remarks |
|---|---|---|---|---|
| 1 | Workspace | Organizations and relationships between the enterprise's big data departments | N/A | Properly plan workspaces to reduce the complexity of workspace dependency |
| | | Access control permissions on data and resources between departments | N/A | User permissions and resource permissions control are involved. |
| 2 | DataArts Migration | Data source from which the data is to be migrated and the data source version | CSV source data files in the OBS bucket | N/A |
| | | Full data volume of each data source | 2,114 bytes | N/A |
| | | Daily incremental data volume of each data source | N/A | N/A |
| | | Types and versions of data sources at the destination | MRS Hive 3.1 | N/A |
| | | Data migration period: day, hour, minute, or real-time | Day | N/A |
| | | Network bandwidth between data sources at the source and destination | 100 MB | N/A |
| | | Description of the network connectivity between the data sources and integration tools | N/A | N/A |
| | | Database migration: number of survey tables and maximum table size | N/A. In this example, data needs to be migrated from OBS to the database. | Understand the scale of database migration and whether the migration duration of the largest table is acceptable. |
| | | File migration: number of files, and whether the size of any file reaches 1 TB | A CSV file smaller than 1 TB | N/A |
| 3 | DataArts Factory | Whether job orchestration and scheduling are required | Yes | N/A |
| | | Services required in orchestration and scheduling, such as MRS, GaussDB(DWS), and CDM | DataArts Migration and DataArts Quality of DataArts Studio, and MRS Hive | Understand application scenarios of jobs to further investigate the suitability of platform capabilities for customer scenarios. |
| | | Number of jobs | Less than 20 | Understand the job scale. Generally, the job scale is described by the number of operators and can be estimated based on the number of tables. |
| | | Number of times a job is scheduled | Unlimited | Determine the DataArts Studio edition based on the scheduling quota of each DataArts Studio sales edition. |
| | | Number of data developers | 1 | N/A |
| 4 | DataArts Architecture | Data sources and number of tables | Only one CSV file | Analyze source data to understand the data source and overall situation. |
| | | Services, requirements, and benefits | Standardize data and models and collect statistics on revenue in a flexible manner. | Analyze the destination to understand the purposes of data governance and digitalization. |
| | | Data survey, data overview, data standards degree, and industry standards overview | N/A | Analyze the process to understand the standards and quality compliance in the data governance process. |
| 5 | DataArts Quality | Requirements and benefits | Data quality monitoring | Monitor more data sources and rules. |
| | | Number of jobs | 1 | You can manually create dozens of jobs or enable the function of automatically generating data quality jobs on DataArts Architecture. If the API for creating data quality jobs is called, more than 100 quality jobs can be created. |
| | | Application scenarios | Standardize and cleanse data at the DWI layer. | Generally, before and after data processing, the data quality is monitored from six dimensions. If any data that does not comply with rules is detected, users will receive an alarm notification. |
| 6 | DataArts Catalog | Data sources to support | MRS Hive | N/A |
| | | Data volume | A table contains fewer than 100 records. | A maximum of 1 million tables can be managed. |
| | | Scheduling frequency of metadata collection | N/A | Collection tasks can be executed by hour, day, or week. |
| | | Key metrics of metadata collection | N/A | The key metrics include the table name, field name, owner, description, and creation time. |
| | | Application scenarios of tags | N/A | Tags are highly related keywords that help you classify and describe assets to facilitate search. |
| 7 | DataArts Security | Data sources to which access is controlled | N/A | Data sources such as GaussDB(DWS), DLI, and Hive are supported. |
| | | Whether static masking is supported | N/A | Static masking is supported for GaussDB(DWS), DLI, and Hive data. |
| | | Whether dynamic masking is supported | N/A | Dynamic masking is supported for GaussDB(DWS) and Hive data. |
| | | Whether data watermarking is supported | N/A | Watermark embedding is supported for Hive data. |
| | | Whether file watermarks are supported | N/A | Invisible watermarks can be injected into structured data files, and visible watermarks can be injected into unstructured data files. |
| | | Whether dynamic watermarking is supported | N/A | Dynamic watermark policies can be configured for Hive and Spark data. |
| 8 | DataArts DataService | Open data sources | N/A | Data sources such as GaussDB(DWS), DLI, and MySQL are supported. |
| | | Daily data calls | N/A | If the database response takes a long period of time due to complex extraction logic, the data calling volume will decrease. |
| | | Number of peak data calls per second | N/A | The number of peak data calls per second varies depending on the edition in use and data extraction logic. |
| | | Average latency of a single data call | N/A | The database response duration is related to the data extraction logic. |
| | | Whether data access records are required | N/A | N/A |
| | | Data access method: intranet or Internet | N/A | N/A |
| | | Number of DataArts DataService developers | N/A | N/A |
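The DataArts Migration items in the survey (full data volume, network bandwidth, and whether the migration duration of the largest table or file is acceptable) can be combined into a rough transfer-time estimate. The sketch below is a back-of-the-envelope calculation, not a DataArts Studio API. It assumes the surveyed bandwidth of "100 MB" means 100 MB per second, which the survey does not state; if the figure is in megabits per second, divide the bandwidth by 8. The `efficiency` parameter is a hypothetical factor for protocol overhead, and job startup time is ignored.

```python
MB = 1_000_000          # bytes in a megabyte (decimal)
TB = 1_000_000_000_000  # bytes in a terabyte (decimal)


def estimate_transfer_seconds(data_bytes, bandwidth_bytes_per_s, efficiency=1.0):
    """Estimate raw transfer time, ignoring job startup and scheduling delays.

    efficiency is a hypothetical factor in (0, 1] for protocol overhead.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return data_bytes / (bandwidth_bytes_per_s * efficiency)


# Assumption: the surveyed "100 MB" bandwidth is read as 100 MB/s.
bandwidth = 100 * MB

# Full data volume from the survey: 2,114 bytes.
example_seconds = estimate_transfer_seconds(2_114, bandwidth)

# Upper bound from the file migration item: a file approaching 1 TB.
large_file_seconds = estimate_transfer_seconds(1 * TB, bandwidth)

print(f"{example_seconds:.6f} s")
print(f"{large_file_seconds / 3600:.2f} h")
```

Under these assumptions, the example file transfers almost instantly, while a file close to 1 TB would take hours. This is why the survey asks about the largest table or file before a daily migration period is chosen.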
