Function

DevEnviron

Setting up a development environment, selecting an AI framework and algorithm, debugging code, installing software, and configuring hardware acceleration are all challenging parts of AI development. To address these challenges, ModelArts provides notebooks that simplify development.

ModelArts notebook is available in an old version and a new version. The new version offers optimized functions and is the recommended one. The following describes the functions of the new-version notebook.

Released in: EU-Dublin

JupyterLab

The in-cloud Jupyter notebook provided by ModelArts enables online interactive development and debugging. It can be used out of the box, relieving you of installation and configuration.

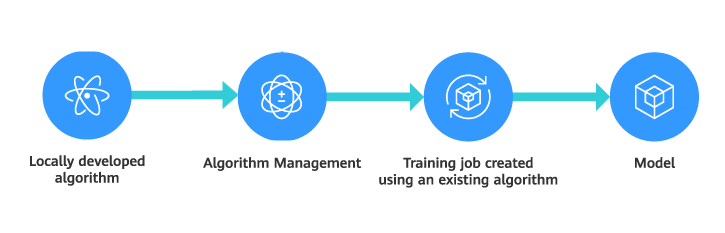

Algorithm Management

You can upload algorithms developed locally or with other tools to ModelArts for unified management. You can then use an algorithm that you have created or subscribed to in order to quickly create a training job on ModelArts and obtain the desired model.

Released in: EU-Dublin

Training Management

All the algorithms developed locally or using other tools can be uploaded to ModelArts for unified management.

You can use algorithms that you have created or subscribed to in order to quickly create training jobs on ModelArts.

Released in: EU-Dublin

Using a Custom Algorithm to Develop Models

If the algorithms that are available for subscription cannot meet service requirements or you want to migrate local algorithms to the cloud for training, use the training engines built into ModelArts to create algorithms. This process is also known as using a custom script to create algorithms.

Almost all mainstream AI engines are built into ModelArts. These built-in engines are pre-loaded with some additional Python packages, such as NumPy. To install other dependency packages, place a requirements.txt file in the code directory.

Using a Custom Image to Develop Models

The built-in training engines and the algorithms that can be subscribed to apply to most training scenarios. In certain scenarios, ModelArts allows you to create custom images to train models. Custom images can be used in the cloud only after they are uploaded to the Software Repository for Container (SWR).

Customizing an image requires a deep understanding of containers. Use this method only if the algorithms that are available to subscribe to and the built-in training engines cannot meet your requirements.

Model Management

ModelArts allows you to deploy models as AI applications and centrally manages these applications. The models can be locally deployed or obtained from training jobs.

ModelArts also enables you to convert models and deploy them on different devices, such as Arm devices.

Released in: EU-Dublin

Importing a Meta Model from OBS Through Manual Configurations

If you develop and train a model with a commonly used framework, you can import the model to ModelArts and use it to create an AI application for unified management.

Importing Models from Custom Images

For an AI engine that is not supported by ModelArts, build a model for the AI engine, create a custom image for the model, import the model to ModelArts, and deploy the model as an AI application.

Model Package Specifications

When you create an AI application in AI Application Management and the meta model is imported from OBS or a container image, ensure that the model package complies with the model package specifications.

Edit the inference code and configuration file for subsequent service deployment.

Note: A model trained using a built-in algorithm already includes the inference code and configuration file. You do not need to configure them separately.
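
As a rough illustration of checking a package before import, the following minimal sketch reports which required files are missing from a local model package directory. The file names `config.json` and `customize_service.py` and the helper `missing_files` are assumptions made for this example; take the actual required names and layout from the model package specifications.

```python
import tempfile
from pathlib import Path

# Assumed file names for illustration only; the real required names
# must come from the model package specifications.
REQUIRED_FILES = ("config.json", "customize_service.py")


def missing_files(package_dir, required=REQUIRED_FILES):
    """Return the required file names that are not present in package_dir."""
    root = Path(package_dir)
    return [name for name in required if not (root / name).is_file()]


# Demo on a temporary directory that contains only the configuration file.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "config.json").write_text("{}")
    missing = missing_files(tmp)

print("Missing files:", missing)
```

Running a check like this before uploading can catch an incomplete package early, though it does not validate the contents of the files.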

Service Deployment

AI model deployment and large-scale service application are complex. To simplify these operations, ModelArts provides you with one-stop deployment that allows you to deploy trained models on devices, edge devices, and the cloud with just one click.

Real-Time Services

Real-time inference services feature high concurrency, low latency, and elastic scaling, and support multi-model gray release and A/B testing. You can deploy a model as a web service that provides a real-time test UI and monitoring capabilities.

Released in: EU-Dublin
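
To make the idea of gray release concrete, the sketch below splits incoming requests between two model versions according to traffic weights. This is a generic illustration, not ModelArts code: the version names, the 90/10 split, and the `pick_version` helper are all assumptions made for the example.

```python
import random
from collections import Counter


def pick_version(weights, rng):
    """Pick a model version with probability proportional to its traffic weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical split: most traffic stays on the stable version while a
# small share is routed to the candidate version being evaluated.
traffic_weights = {"v1-stable": 90, "v2-candidate": 10}

rng = random.Random(0)  # seeded only so that the sketch is reproducible
counts = Counter(pick_version(traffic_weights, rng) for _ in range(10_000))
print(counts)
```

Raising the candidate weight step by step shifts more traffic to the new version, and comparing metrics between the two groups is the basis of an A/B test.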

Batch Services

Batch services are suitable for processing a large amount of data and distributed computing. You can use a batch service to perform inference on data in batches. The batch service automatically stops after data processing is complete.

Released in: EU-Dublin
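
The batch pattern described above can be sketched in a few lines: split the input into fixed-size batches, run inference on each batch, and finish once the data is exhausted. The `batched`, `run_batch_job`, and `toy_predict` names are hypothetical, and `toy_predict` merely stands in for a real model.

```python
from typing import Callable, Iterator, List, Sequence


def batched(items: Sequence, batch_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of items, each with at most batch_size elements."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_batch_job(records: Sequence, predict: Callable, batch_size: int = 4) -> List:
    """Run predict on every batch and collect the results in input order."""
    results: List = []
    for batch in batched(records, batch_size):
        results.extend(predict(batch))
    return results  # the job ends once all data has been processed


def toy_predict(batch):
    """Stand-in for model inference: doubles each input value."""
    return [x * 2 for x in batch]


outputs = run_batch_job(list(range(10)), toy_predict, batch_size=4)
print(outputs)
```

The loop terminates on its own when the input is consumed, mirroring how a batch service stops after data processing is complete.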

Resource Pools

When you use ModelArts for AI development, you may need some compute resources for training or inference. ModelArts provides pay-per-use public resource pools and queue-free dedicated resource pools to meet diverse service requirements.

Public Resource Pools

Public resource pools provide large-scale public computing clusters, which are allocated based on job parameter settings. Resources are isolated by job. Public resource pools are billed based on resource flavors, service duration, and the number of instances, regardless of tasks (training, deployment, or development) for which the pools are used. Public resource pools are available by default. You can select a public resource pool during AI development.
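
Because billing depends on the resource flavor, the service duration, and the number of instances, a rough cost estimate can be sketched as the product of the three. The formula, the `estimate_cost` helper, and the unit price below are assumptions for illustration only; actual prices and billing rules come from the official pricing details.

```python
from decimal import Decimal


def estimate_cost(unit_price_per_hour: str, hours: str, instances: int) -> Decimal:
    """Estimate cost as flavor unit price x duration in hours x instance count."""
    if instances < 0:
        raise ValueError("instances must be non-negative")
    return Decimal(unit_price_per_hour) * Decimal(hours) * instances


# Hypothetical flavor price of 1.50 per instance-hour, 2.5 hours, 4 instances.
cost = estimate_cost("1.50", "2.5", 4)
print("Estimated cost:", cost)
```

`Decimal` is used instead of `float` so that monetary amounts are not affected by binary rounding.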

Dedicated Resource Pools

Dedicated resource pools provide dedicated compute resources, which can be used for notebook instances, training jobs, and model deployment. You do not need to queue for resources when using a dedicated resource pool, resulting in greater efficiency. To use a dedicated resource pool, buy one and select it during AI development.

Released in: EU-Dublin

ModelArts SDKs

ModelArts Software Development Kits (ModelArts SDKs) encapsulate the ModelArts REST APIs in Python to simplify application development. You can call ModelArts SDKs directly to start AI training, generate models, and deploy the models as real-time services.

In notebook instances, you can use ModelArts SDKs to manage OBS data, training jobs, models, and real-time services without authentication.

Released in: EU-Dublin
