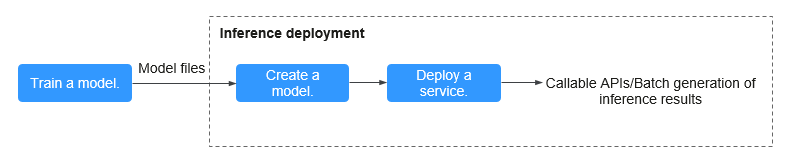

Overview

You can import AI models and deploy them as inference services. These services can be integrated into your IT platform through API calls, or used to generate batch results.

- Train a model: Models can be trained in ModelArts or your local development environment. A locally developed model must be uploaded to OBS.

- Create a model: Import the model file and inference file into the ModelArts model repository, where they are managed by version, and use them to build an executable model.

- Deploy a service: Deploy the model as the type of service that fits your needs.
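The three steps above can be pictured as a small pipeline. The following Python sketch is a hypothetical, in-memory stand-in and not the ModelArts SDK. `ModelRepository`, `ModelVersion`, `deploy`, and the `obs://` paths are illustrative names assumed here. It only shows the idea that each import of a model file plus an inference file creates a new numbered version, and that deployment selects one version and one service type.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical stand-in for a versioned model repository (NOT the ModelArts SDK).
@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    model_file: str      # illustrative OBS path to the trained model
    inference_file: str  # illustrative OBS path to the inference code

@dataclass
class ModelRepository:
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def import_model(self, name: str, model_file: str, inference_file: str) -> ModelVersion:
        """Register a new version of `name`; versions are numbered 1, 2, 3, ..."""
        history = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, model_file, inference_file)
        history.append(mv)
        return mv

    def get(self, name: str, version: int) -> ModelVersion:
        """Look up a specific version of a model by name."""
        history = self._versions[name]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]

# The two service types described on this page.
SERVICE_TYPES = {"real-time", "batch"}

def deploy(model: ModelVersion, service_type: str) -> str:
    """Hypothetical deploy step: returns a description of the service to create."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return f"{model.name}:v{model.version} -> {service_type} service"

if __name__ == "__main__":
    repo = ModelRepository()
    repo.import_model("resnet", "obs://my-bucket/resnet/v1/model", "obs://my-bucket/resnet/v1/infer.py")
    v2 = repo.import_model("resnet", "obs://my-bucket/resnet/v2/model", "obs://my-bucket/resnet/v2/infer.py")
    print(deploy(v2, "real-time"))  # resnet:v2 -> real-time service
```

Keeping every import as a separate, immutable version means a deployment can always point at an exact model build, and an older version stays available if a newer one misbehaves.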

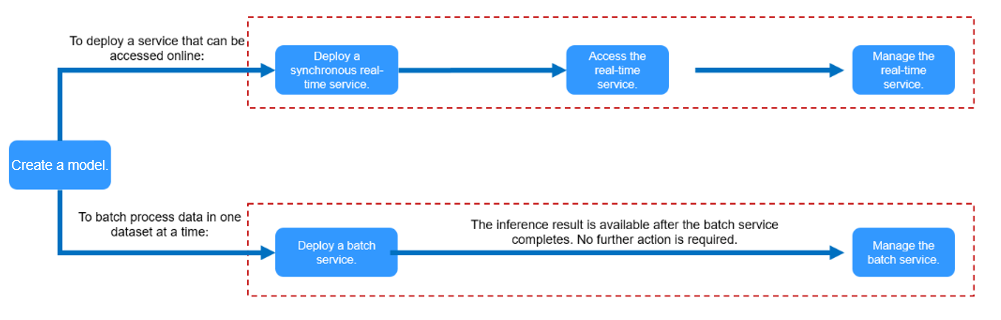

- Deploying a Model as Real-Time Inference Jobs

Deploy a model as a web service with a real-time UI and monitoring. The service provides a callable API.

- Deploying a Model as a Batch Inference Service

Deploy a model as a batch service that performs inference on batch data and stops automatically once data processing is complete.
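To make the contrast between the two service types concrete, here is a minimal Python sketch under stated assumptions. A real-time service is called once per request over HTTPS; the sketch only builds (does not send) a POST request with the standard library, assuming token authentication through an `X-Auth-Token` header and a JSON body. The URL, token, and payload shape are placeholders, and the real request format depends on your model's inference code. The batch side is modeled as a plain loop over a hypothetical `predict` callable that processes every input once and then returns, mirroring a batch service that stops when its data is exhausted.

```python
import json
import urllib.request
from typing import Any, Callable, Iterable, List

def build_realtime_request(api_url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an HTTPS POST to a real-time service endpoint.

    Assumption: token auth via an X-Auth-Token header and a JSON request body.
    Send it with urllib.request.urlopen(req) once the URL and token are real.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        api_url,
        data=body,
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )

def run_batch_inference(inputs: Iterable[Any], predict: Callable[[Any], Any]) -> List[Any]:
    """Apply a hypothetical `predict` to every input once, then return.

    Unlike a real-time service, nothing keeps running after the data is processed.
    """
    return [predict(item) for item in inputs]

if __name__ == "__main__":
    req = build_realtime_request(
        "https://example.com/predict",  # placeholder for your service's API URL
        "YOUR_TOKEN",                   # placeholder token
        {"data": [1.0, 2.0, 3.0]},      # placeholder payload
    )
    print(req.get_method(), req.full_url)                  # POST https://example.com/predict
    print(run_batch_inference([1, 2, 3], lambda x: x * 2))  # [2, 4, 6]
```

The real-time path keeps an endpoint alive and pays per-request latency, which suits interactive callers; the batch path trades that for throughput and releases resources as soon as the input set is done.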

Figure 2 Different inference scenarios
