Why Does an Exception Occur When I Drop Functions Created Using the Add Jar Statement?

Question

- Question 1

Why can I drop a function successfully even though I do not have the drop function permission? The specific operations are as follows:

- On FusionInsight Manager, I added user user1 and granted the user the admin permission. Then I ran the following commands in spark-beeline:

set role admin; add jar /home/smartcare-udf-0.0.1-SNAPSHOT.jar; create database db4; use db4; create function f11 as 'com.huawei.smartcare.dac.hive.udf.UDFArrayGreaterEqual'; create function f12 as 'com.huawei.smartcare.dac.hive.udf.UDFArrayGreaterEqual';

- Then I revoked the admin permission and ran the following command:

drop function db4.f11;

The result shows that the drop operation is successful.

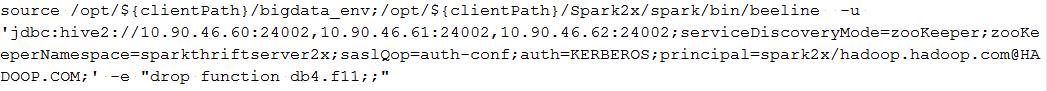

Figure 1 Command output of the drop function command


- Question 2

After the preceding operation, I ran the show functions command, and the command output indicates that the function still exists. The specific operations are as follows:

- On FusionInsight Manager, I added user user1 and granted the user the admin permission. Then I ran the following commands in spark-beeline:

set role admin; create database db2; use db2; add jar /home/smartcare-udf-0.0.1-SNAPSHOT.jar; create function f11 as 'com.huawei.smartcare.dac.hive.udf.UDFArrayGreaterEqual'; create function f12 as 'com.huawei.smartcare.dac.hive.udf.UDFArrayGreaterEqual';

- I exited spark-beeline, logged in again, and ran the following command:

set role admin; use db2; drop function db2.f11;

- I exited spark-beeline once more, logged in again, and ran the following command:

use db2; show functions;

The result shows that the dropped function still exists.

Figure 2 Output of the show functions command


Answer

- Root cause:

In multi-active instance or multi-tenant mode, a function created after running the add jar command in spark-beeline is visible only to the JDBCServer instance that the session was connected to when the function was created; other JDBCServer instances cannot see it. When you later run the drop function command, the new session may be connected to a different JDBCServer instance, and that instance cannot find the function. In addition, hive.exec.drop.ignorenonexistent defaults to true in Hive, so dropping a function that does not exist does not report an exception. As a result, the drop operation appears to succeed even if you do not have the drop function permission, although nothing is actually dropped. When you start a new session that happens to connect to the JDBCServer instance that created the function, the show functions command still lists the function. This problem is inherited from the Hive community.

- Solution:

Before you run the drop function command, run the add jar command in the same session. In this way, the drop operation succeeds only if you have the drop function permission, and the dropped function no longer appears in the output of the show functions command.
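The recommended order can be sketched as a single spark-beeline session. This is a minimal sketch that reuses the JAR path, database db2, and function f11 from Question 2; substitute your own JAR path and function names, and note that whether set role admin is needed depends on how permissions are granted in your cluster.

```sql
-- Run every statement below in ONE spark-beeline session, so that
-- add jar and drop function reach the same JDBCServer instance.
set role admin;
use db2;
-- Load the UDF JAR first (path taken from Question 2).
add jar /home/smartcare-udf-0.0.1-SNAPSHOT.jar;
-- The drop is now subject to the drop function permission check.
drop function db2.f11;
-- Check that f11 is no longer listed.
show functions;
```

If the drop function statement fails with a permission error at this point, the permission check is working as intended rather than being bypassed by a missing function.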
