Oozie Client Configurations

Scenario

This section describes how to use the Oozie client in an O&M scenario or service scenario. Oozie can submit multiple types of tasks, such as Hive, Spark2x, Loader, MapReduce, Java, DistCp, Shell, HDFS, SSH, SubWorkflow, Streaming, and scheduled tasks.

Prerequisites

- The client has been installed in a directory, for example, /opt/client. The client directory in the following operations is only an example. Change it based on site requirements.

- Service component users have been created by the MRS cluster administrator. In security mode, machine-machine users need to download the keytab file. A human-machine user must change the password upon the first login.

Using the Oozie Client

- Log in to the node where the client is installed as the client installation user.

- Run the following command to switch to the client installation directory (change it to the actual installation directory):

cd /opt/client

- Run the following command to configure environment variables:

source bigdata_env

- Check the cluster authentication mode.

- If the cluster is in security mode, run the following command to authenticate the user, where exampleUser is the name of the user who submits tasks:

kinit exampleUser

- If the cluster is in normal mode, skip user authentication and go to the next step.

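The login, environment, and authentication steps above can be collected into one shell function. This is a minimal sketch, not part of the MRS client: the names run and prepare_oozie_session and the CLIENT_DIR and DRY_RUN variables are hypothetical, and the cluster mode is passed in by hand because this guide does not describe a way to detect it. With DRY_RUN=1 the function only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch only: prepare an Oozie client session.
# Hypothetical names: run, prepare_oozie_session, CLIENT_DIR, DRY_RUN.

# Print the command when DRY_RUN=1; otherwise execute it in the current shell.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: prepare_oozie_session <security|normal> <user>
prepare_oozie_session() {
  local mode="$1" user="$2"
  local client_dir="${CLIENT_DIR:-/opt/client}"

  run cd "$client_dir" || return 1
  # bigdata_env sets the client environment variables.
  run source "$client_dir/bigdata_env" || return 1

  case "$mode" in
    security) run kinit "$user" || return 1 ;;  # kinit prompts for the password
    normal)   ;;                                # no Kerberos authentication needed
    *) echo "unknown mode: $mode" >&2; return 2 ;;
  esac
}
```

Define the function in your login shell (for example, by sourcing the file that contains it) so that the directory change and environment variables persist. For example, prepare_oozie_session security exampleUser changes to /opt/client, loads bigdata_env, and runs kinit exampleUser.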

- Perform the following operations to configure Hue:

- Configure the Spark2x environment (skip this step if no Spark2x tasks are involved):

hdfs dfs -put /opt/client/Spark2x/spark/jars/*.jar /user/oozie/share/lib/spark2x/

If the JAR packages in the HDFS directory /user/oozie/share change, restart the Oozie service.

- Upload the Oozie configuration file and JAR package to HDFS.

hdfs dfs -mkdir /user/exampleUser

hdfs dfs -put -f /opt/client/Oozie/oozie-client-*/examples /user/exampleUser/

- exampleUser indicates the name of the user who submits tasks.

- If the task submission user and all files other than job.properties remain unchanged, the Oozie/oozie-client-*/examples directory in the client installation directory only needs to be uploaded to HDFS once and can then be reused.

- Resolve the Jetty JAR file conflict between Spark and Yarn:

hdfs dfs -rm -f /user/oozie/share/lib/spark/jetty-all-9.2.22.v20170606.jar

- In normal mode, if Permission denied is displayed during the upload, run the following commands:

su - omm

source /opt/client/bigdata_env

hdfs dfs -chmod -R 777 /user/oozie

exit

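The HDFS preparation above can be scripted in the same way. This is a sketch under stated assumptions: run and prepare_oozie_hdfs are hypothetical names, the paths are copied from this guide, and hdfs dfs -mkdir -p is used instead of a plain -mkdir so that re-running the script does not fail when /user/<user> already exists. The normal-mode chmod fix is left out because it has to be run as omm.

```shell
#!/usr/bin/env bash
# Sketch only: one-time HDFS preparation for the Oozie examples.
# Hypothetical names: run, prepare_oozie_hdfs, CLIENT_DIR, DRY_RUN.
# Paths are taken from this guide; adjust them to your installation.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: prepare_oozie_hdfs <user> [with-spark2x]
prepare_oozie_hdfs() {
  local user="$1" with_spark="${2:-}"
  local client_dir="${CLIENT_DIR:-/opt/client}"

  if [ "$with_spark" = "with-spark2x" ]; then
    # Only needed for Spark2x tasks. Restart the Oozie service afterwards,
    # because the JAR packages under /user/oozie/share have changed.
    run hdfs dfs -put "$client_dir"/Spark2x/spark/jars/*.jar /user/oozie/share/lib/spark2x/ || return 1
  fi

  # -p lets mkdir succeed if the directory already exists.
  run hdfs dfs -mkdir -p "/user/$user" || return 1
  run hdfs dfs -put -f "$client_dir"/Oozie/oozie-client-*/examples "/user/$user/" || return 1

  # Remove the Jetty JAR that conflicts between Spark and Yarn.
  run hdfs dfs -rm -f /user/oozie/share/lib/spark/jetty-all-9.2.22.v20170606.jar
}
```

For example, DRY_RUN=1 prepare_oozie_hdfs exampleUser with-spark2x prints the four hdfs commands without executing them.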

- The following describes how to submit a MapReduce job on the Oozie client.

- Run the following commands to modify the job execution configuration file:

cd /opt/client/Oozie/oozie-client-*/examples/apps/map-reduce/

vi job.properties

nameNode=hdfs://hacluster
resourceManager=10.64.35.161:8032
queueName=default
examplesRoot=examples
user.name=admin
# HDFS upload path
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/apps/map-reduce
outputDir=map-reduce
oozie.wf.rerun.failnodes=true

In this example, 10.64.35.161 is the service plane IP address of the active Yarn ResourceManager node, and 8032 is the port number set by yarn.resourcemanager.port.

- Run the following command to execute the Oozie job:

oozie job -oozie https://<Oozie role host name>:21003/oozie/ -config job.properties -run

21003 is the port used for Oozie HTTPS requests. To check it, log in to FusionInsight Manager, choose Cluster > Services > Oozie > Configurations, and search for OOZIE_HTTPS_PORT.

Example output:

[root@kwephispra44947 map-reduce]# oozie job -oozie https://kwephispra44948:21003/oozie/ -config job.properties -run
......
job: 0000000-200730163829770-oozie-omm-W
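Writing job.properties and submitting the job can also be scripted. In this sketch, write_job_properties, extract_job_id, and submit_oozie_job are hypothetical helpers, and the port defaults to 21003 unless the hypothetical OOZIE_HTTPS_PORT variable is set (check the real value in FusionInsight Manager). user.name is set to the submitting user: oozie.wf.application.path is built from ${user.name}, so this makes it point at the /user/<user>/examples directory uploaded earlier. The job ID is taken from the "job: <job ID>" line shown in the example output.

```shell
#!/usr/bin/env bash
# Sketch only: write job.properties for the map-reduce example and submit it.
# Hypothetical names: write_job_properties, extract_job_id, submit_oozie_job,
# OOZIE_HTTPS_PORT.

# Usage: write_job_properties <rm_host:port> <user> [file]
write_job_properties() {
  local rm="$1" user="$2" file="${3:-job.properties}"
  # \${...} keeps the placeholders literal so that Oozie resolves them.
  cat > "$file" <<EOF
nameNode=hdfs://hacluster
resourceManager=${rm}
queueName=default
examplesRoot=examples
user.name=${user}
# HDFS upload path
oozie.wf.application.path=\${nameNode}/user/\${user.name}/\${examplesRoot}/apps/map-reduce
outputDir=map-reduce
oozie.wf.rerun.failnodes=true
EOF
}

# Read "oozie job ... -run" output on stdin and print the job ID
# from the line of the form "job: <job ID>".
extract_job_id() {
  sed -n 's/^job: //p'
}

# Usage: submit_oozie_job <oozie_host> [file]; prints the job ID on success.
submit_oozie_job() {
  local host="$1" file="${2:-job.properties}"
  local url="https://${host}:${OOZIE_HTTPS_PORT:-21003}/oozie/"
  oozie job -oozie "$url" -config "$file" -run | extract_job_id
}
```

For example, run write_job_properties 10.64.35.161:8032 exampleUser followed by submit_oozie_job kwephispra44948 from the map-reduce example directory. Note that write_job_properties overwrites the job.properties file there.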

- Log in to FusionInsight Manager. For details, see Accessing FusionInsight Manager.

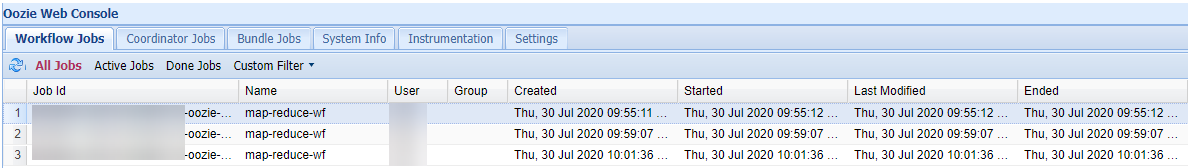

- Choose Cluster > Name of the desired cluster > Services > Oozie, click the hyperlink next to Oozie WebUI to go to the Oozie page, and view the task execution result on the Oozie web UI.

Figure 1 Task execution result
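As a command-line alternative to the web UI, the standard Oozie CLI can report a job's status with oozie job -info <job ID>. This is a minimal sketch: oozie_url, parse_oozie_status, and oozie_job_status are hypothetical helpers, and parse_oozie_status assumes the "Status : <value>" line that the Apache Oozie CLI prints in its -info output. Verify that format against your client version before relying on it.

```shell
#!/usr/bin/env bash
# Sketch only: query an Oozie workflow job from the command line.
# Hypothetical names: oozie_url, parse_oozie_status, oozie_job_status,
# OOZIE_HTTPS_PORT.

# Build the client URL; 21003 is the OOZIE_HTTPS_PORT value used in this guide.
oozie_url() {
  echo "https://$1:${OOZIE_HTTPS_PORT:-21003}/oozie/"
}

# Read "oozie job -info" output on stdin and print the value of the first
# line that starts with "Status" (assumed format: "Status        : SUCCEEDED").
parse_oozie_status() {
  awk -F': *' '/^Status/ { print $2; exit }'
}

# Usage: oozie_job_status <oozie_host> <job_id>
oozie_job_status() {
  oozie job -oozie "$(oozie_url "$1")" -info "$2" | parse_oozie_status
}
```

For example, oozie_job_status kwephispra44948 0000000-200730163829770-oozie-omm-W prints the job's current status, such as RUNNING or SUCCEEDED.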

