How Can I Obtain GPU Usage Through Code?

Use shell commands or Python code to obtain GPU usage.

Using Shell Commands

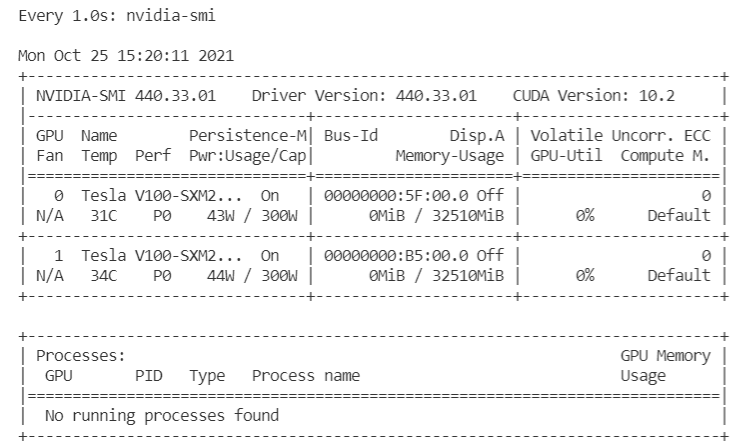

- Run the nvidia-smi command.

This command requires the NVIDIA driver, which provides nvidia-smi.

watch -n 1 nvidia-smi

- Run the gpustat command.

pip install gpustat

gpustat -cp -i

To stop the command execution, press Ctrl+C.
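The nvidia-smi output can also be read from code rather than watched in a terminal. The following is a minimal sketch, assuming nvidia-smi is on the PATH; it uses the `--query-gpu` and `--format=csv,noheader,nounits` options to get machine-readable output. The names `QUERY_FIELDS`, `parse_gpu_csv`, and `query_gpus` are illustrative and are not part of any library.

```python
import csv
import io
import shutil
import subprocess

# Fields requested from nvidia-smi; `nvidia-smi --help-query-gpu` lists all of them.
QUERY_FIELDS = ["index", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into a list of dicts (string values)."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(record) != len(QUERY_FIELDS):
            continue  # skip blank or malformed lines
        rows.append(dict(zip(QUERY_FIELDS, (field.strip() for field in record))))
    return rows


def query_gpus():
    """Return one dict per GPU, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        # With `nounits`, utilization is in percent and memory is in MiB.
        print(f"GPU {gpu['index']}: util {gpu['utilization.gpu']}% | "
              f"mem {gpu['memory.used']}/{gpu['memory.total']} MiB")
```

Values are kept as strings because nvidia-smi may report a field as unavailable on some devices; convert them to numbers only after checking.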

Using Python Code

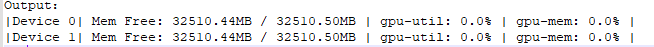

- Use the nvidia-ml-py3 package (commonly used).

!pip install nvidia-ml-py3

import nvidia_smi  # module installed by the nvidia-ml-py3 package

nvidia_smi.nvmlInit()
deviceCount = nvidia_smi.nvmlDeviceGetCount()
for i in range(deviceCount):
    handle = nvidia_smi.nvmlDeviceGetHandleByIndex(i)
    util = nvidia_smi.nvmlDeviceGetUtilizationRates(handle)
    mem = nvidia_smi.nvmlDeviceGetMemoryInfo(handle)
    # util.gpu and util.memory are integer percentages (0-100),
    # so divide by 100 before applying the % format.
    print(f"|Device {i}| Mem Free: {mem.free/1024**2:5.2f}MB / {mem.total/1024**2:5.2f}MB | gpu-util: {util.gpu/100.0:3.1%} | gpu-mem: {util.memory/100.0:3.1%} |")

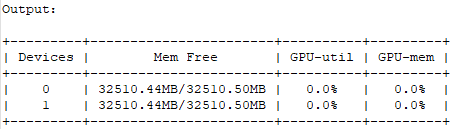

- Use nvidia_smi with a wrapper function and prettytable.

Use a decorator to print the GPU usage each time the decorated function is called, for example during model training.

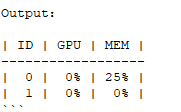

import functools

def gputil_decorator(func):
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        import nvidia_smi
        import prettytable as pt  # requires: pip install prettytable

        table = pt.PrettyTable(['Devices', 'Mem Free', 'GPU-util', 'GPU-mem'])
        try:
            nvidia_smi.nvmlInit()
            deviceCount = nvidia_smi.nvmlDeviceGetCount()
            for i in range(deviceCount):
                handle = nvidia_smi.nvmlDeviceGetHandleByIndex(i)
                res = nvidia_smi.nvmlDeviceGetUtilizationRates(handle)
                mem = nvidia_smi.nvmlDeviceGetMemoryInfo(handle)
                # res.gpu and res.memory are integer percentages (0-100).
                table.add_row([i, f"{mem.free/1024**2:5.2f}MB/{mem.total/1024**2:5.2f}MB", f"{res.gpu/100.0:3.1%}", f"{res.memory/100.0:3.1%}"])
        except nvidia_smi.NVMLError as error:
            print(error)
        print(table)
        return func(*args, **kwargs)
    return wrapper

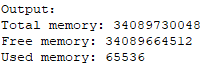
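A decorator of this kind is applied to the function whose calls should trigger a report. The sketch below shows the pattern end to end under a few assumptions: it uses the pynvml module from nvidia-ml-py3, it returns an empty report instead of failing when the package or the NVIDIA driver is missing, and `read_gpu_stats`, `report_gpu`, and `train_one_epoch` are hypothetical names chosen for illustration.

```python
import functools


def read_gpu_stats():
    """Return (index, free_mib, total_mib, gpu_util_percent) per GPU, or [] if NVML is unavailable."""
    try:
        import pynvml  # provided by the nvidia-ml-py3 package
    except ImportError:
        return []
    try:
        pynvml.nvmlInit()
    except pynvml.NVMLError:
        return []  # no NVIDIA driver or no GPU on this machine
    try:
        stats = []
        for i in range(pynvml.nvmlDeviceGetCount()):
            handle = pynvml.nvmlDeviceGetHandleByIndex(i)
            mem = pynvml.nvmlDeviceGetMemoryInfo(handle)
            util = pynvml.nvmlDeviceGetUtilizationRates(handle)
            stats.append((i, mem.free / 1024**2, mem.total / 1024**2, util.gpu))
        return stats
    except pynvml.NVMLError:
        return []
    finally:
        pynvml.nvmlShutdown()


def report_gpu(func):
    """Print GPU usage each time the wrapped function is called."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        for index, free_mib, total_mib, util in read_gpu_stats():
            print(f"GPU {index}: {free_mib:.0f}/{total_mib:.0f} MiB free, util {util}%")
        return func(*args, **kwargs)
    return wrapper


@report_gpu
def train_one_epoch(epoch):
    # Hypothetical stand-in for a real training step.
    return f"epoch {epoch} done"


if __name__ == "__main__":
    for epoch in range(3):
        print(train_one_epoch(epoch))
```

Because the report is printed before each call, decorating a per-epoch or per-step function gives periodic snapshots rather than continuous monitoring.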

- Use pynvml.

The pynvml module, installed by nvidia-ml-py3, calls the NVML C library directly instead of invoking nvidia-smi. Therefore, this method is recommended.

from pynvml import *

nvmlInit()
handle = nvmlDeviceGetHandleByIndex(0)  # first GPU
info = nvmlDeviceGetMemoryInfo(handle)
# Memory values are reported in bytes.
print("Total memory:", info.total)
print("Free memory:", info.free)
print("Used memory:", info.used)

- Use GPUtil.

!pip install gputil

import GPUtil as GPU
GPU.showUtilization()

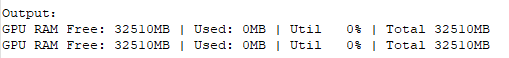

import GPUtil as GPU
GPUs = GPU.getGPUs()
for gpu in GPUs:
    print("GPU RAM Free: {0:.0f}MB | Used: {1:.0f}MB | Util {2:3.0f}% | Total {3:.0f}MB".format(gpu.memoryFree, gpu.memoryUsed, gpu.memoryUtil*100, gpu.memoryTotal))

When using a deep learning framework such as PyTorch or TensorFlow, you can also query GPU usage through the APIs that the framework provides.
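As one illustration for PyTorch, the sketch below collects memory figures through `torch.cuda`. It assumes PyTorch is installed with CUDA support and returns an empty list otherwise; the function name `torch_gpu_memory` is hypothetical. Note that `memory_allocated` and `memory_reserved` describe only the current process, not other processes sharing the device.

```python
def torch_gpu_memory():
    """Return per-device memory figures in MiB as seen by PyTorch, or [] without PyTorch/CUDA."""
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    mib = 1024 ** 2
    report = []
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        report.append({
            "device": i,
            "name": props.name,
            "total_mib": props.total_memory / mib,
            # Memory occupied by live tensors of this process.
            "allocated_mib": torch.cuda.memory_allocated(i) / mib,
            # Memory held by PyTorch's caching allocator for this process.
            "reserved_mib": torch.cuda.memory_reserved(i) / mib,
        })
    return report


if __name__ == "__main__":
    for entry in torch_gpu_memory():
        print(f"cuda:{entry['device']} {entry['name']}: "
              f"allocated {entry['allocated_mib']:.0f} MiB, "
              f"reserved {entry['reserved_mib']:.0f} MiB, "
              f"total {entry['total_mib']:.0f} MiB")
```

For device-wide utilization that includes other processes, the NVML-based methods above remain the better fit.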
