Using the Flink Client

This section describes how to use Flink to run wordcount jobs.

Prerequisites

- Flink has been installed in an MRS cluster.

- The cluster runs properly and the client has been correctly installed, for example, in the /opt/hadoopclient directory. The client directory in the following operations is only an example. Change it to the actual installation directory.

Procedure

- Install a client.

The following example installs a Flink client on a node in the cluster:

- Log in to FusionInsight Manager, choose Cluster, click the name of the desired cluster, click More, and select Download Client.

- In the Download Cluster Client dialog box that is displayed, select Complete Client for Select Client Type, select the platform type that matches the architecture of the node where the client is to be installed, select Save to Path, and click OK.

- The generated file is stored in the /tmp/FusionInsight-Client directory on the active management node by default.

- The name of the client software package is in the following format: FusionInsight_Cluster_<Cluster ID>_Services_Client.tar. In this section, the cluster ID 1 is used as an example. Replace it with the actual cluster ID.

- Log in to the server where the client is to be installed as the client installation user.

- Go to the directory where the installation package is stored and run the following command to decompress the package:

tar -xvf FusionInsight_Cluster_1_Services_Client.tar

- Run the following command to verify the decompressed file and check whether the command output is consistent with the information in the sha256 file:

sha256sum -c FusionInsight_Cluster_1_Services_ClientConfig.tar.sha256

FusionInsight_Cluster_1_Services_ClientConfig.tar: OK
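When the installation is scripted, the checksum result can gate the remaining steps. The following is a minimal bash sketch, not part of the product tooling: verify_package is a hypothetical helper name, and the paths in the usage comment are the example paths from this section. It relies only on the exit status of sha256sum -c, which is non-zero when a checksum does not match.

```shell
# verify_package DIR SHA_FILE   (hypothetical helper, for illustration only)
# Runs `sha256sum -c` inside DIR so that the relative file name recorded in
# SHA_FILE resolves, and returns non-zero if any checksum does not match.
verify_package() {
    local dir="$1" sha_file="$2"
    ( cd "$dir" && sha256sum -c "$sha_file" )
}

# Example usage with the illustrative paths from this section:
# verify_package /tmp/FusionInsight-Client \
#     FusionInsight_Cluster_1_Services_ClientConfig.tar.sha256 \
#     || { echo "Checksum mismatch; do not install." >&2; exit 1; }
```

Running the check in a subshell keeps the caller's working directory unchanged.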

- Decompress the obtained installation file:

tar -xvf FusionInsight_Cluster_1_Services_ClientConfig.tar

- Go to the directory where the installation package is stored, and run the following command to install the client to a specified directory (absolute path), for example, /opt/hadoopclient:

cd /tmp/FusionInsight-Client/FusionInsight_Cluster_1_Services_ClientConfig

./install.sh /opt/hadoopclient

The client is installed if information similar to the following is displayed:

The component client is installed successfully

- Log in to the node where the client is installed as the client installation user.

- Run the following command to go to the client installation directory:

cd /opt/hadoopclient

- Run the following command to initialize environment variables:

source /opt/hadoopclient/bigdata_env

- Perform the following operations if Kerberos authentication is enabled for the cluster. Otherwise, skip these operations.

- Prepare a user for submitting Flink jobs.

Log in to FusionInsight Manager and choose System > Permission > Role. Click Create Role and configure Role Name and Description. In Configure Resource Permission, choose Name of the desired cluster > Flink and select FlinkServer Admin Privilege. Then click OK.

Choose System > Permission > User and click Create User. Configure Username, set User Type to Human-Machine, and configure Password and Confirm Password. Click Add next to User Group to add the hadoop, yarnviewgroup, and hadoopmanager user groups as needed. Click Add next to Role to add the System_administrator role, the default role, and the role you created. Then click OK. (The first time you use a newly created Flink job user, log in to FusionInsight Manager as that user and change the password.)

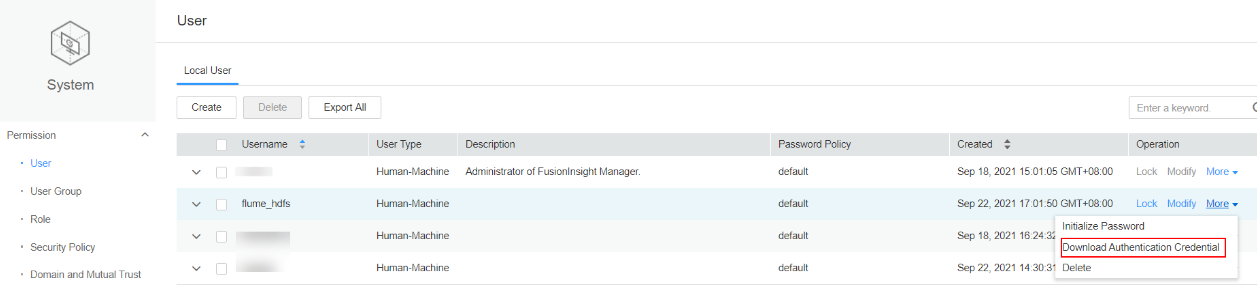

- Log in to Manager and download the authentication credential.

Log in to Manager and choose System > Permission > User. On the displayed page, locate the row that contains the added user, click More in the Operation column, and select Download Authentication Credential.

Figure 1 Downloading the authentication credential

- Decompress the downloaded authentication credential package and copy the obtained file to the client node, for example, the /opt/hadoopclient/Flink/flink/conf directory. If the client is installed on a node outside the cluster, copy the obtained files to the /etc/ directory on the node.

- Add the service IP address of the node where the client is installed and IP address of the master node to the jobmanager.web.access-control-allow-origin and jobmanager.web.allow-access-address configuration items in the /opt/hadoopclient/Flink/flink/conf/flink-conf.yaml file. Use commas (,) to separate the IP addresses.

jobmanager.web.access-control-allow-origin: xx.xx.xxx.xxx,xx.xx.xxx.xxx,xx.xx.xxx.xxx

jobmanager.web.allow-access-address: xx.xx.xxx.xxx,xx.xx.xxx.xxx,xx.xx.xxx.xxx

To obtain the service IP address of the node where the client is installed, perform the following operations:

- Node inside the cluster:

In the navigation pane of the MRS management console, choose Active Clusters, select a cluster, and click its name to switch to the cluster details page.

On the Nodes tab page, view the IP address of the node where the client is installed.

- Node outside the cluster: IP address of the ECS where the client is installed.
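The two flink-conf.yaml items above can also be set from a script rather than by hand. The sketch below is an illustration under stated assumptions: set_conf is a hypothetical helper, the IP addresses in the usage comment are placeholders, each item is assumed to occupy a single "key: value" line, and sed -i is used in its GNU form. Back up flink-conf.yaml before trying it.

```shell
# set_conf FILE KEY VALUE   (hypothetical helper, for illustration only)
# Replaces the existing "KEY: ..." line in FILE, or appends one if the key
# is not present. Assumes single-line "key: value" entries and GNU sed.
set_conf() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}:" "$file"; then
        sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
    else
        printf '%s: %s\n' "$key" "$value" >> "$file"
    fi
}

# Example usage (placeholder addresses; replace with the real service IPs):
# CONF=/opt/hadoopclient/Flink/flink/conf/flink-conf.yaml
# IPS="192.0.2.10,192.0.2.11"
# set_conf "$CONF" jobmanager.web.access-control-allow-origin "$IPS"
# set_conf "$CONF" jobmanager.web.allow-access-address "$IPS"
```

The helper is only suitable for simple values such as comma-separated IP addresses, because the value is passed to sed unescaped.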

- Configure security authentication by adding the keytab path and username in the /opt/hadoopclient/Flink/flink/conf/flink-conf.yaml configuration file.

security.kerberos.login.keytab: <user.keytab file path>

security.kerberos.login.principal: <Username>

Example:

security.kerberos.login.keytab: /opt/hadoopclient/Flink/flink/conf/user.keytab

security.kerberos.login.principal: test

- In the bin directory of the Flink client, run the following commands to perform security hardening. Then, set a password for submitting jobs.

cd /opt/hadoopclient/Flink/flink/bin

sh generate_keystore.sh

The script automatically replaces the SSL value in the /opt/hadoopclient/Flink/flink/conf/flink-conf.yaml file.

After authentication and encryption, the flink.keystore and flink.truststore files are generated in the conf directory on the Flink client, and the following configuration items are set to default values in the flink-conf.yaml file:

- security.ssl.keystore is set to the absolute path of the flink.keystore file.

- security.ssl.truststore is set to the absolute path of the flink.truststore file.

- security.cookie is set to a random password automatically generated by the generate_keystore.sh script.

- By default, security.ssl.encrypt.enabled is set to false in the flink-conf.yaml file. In this case, the generate_keystore.sh script sets security.ssl.key-password, security.ssl.keystore-password, and security.ssl.truststore-password to the password entered when the script is called.

- If ciphertext is required and security.ssl.encrypt.enabled is true in the flink-conf.yaml file, the generate_keystore.sh script does not set security.ssl.key-password, security.ssl.keystore-password, and security.ssl.truststore-password. To obtain the values, call the Manager plaintext encryption API by running the following command:

curl -k -i -u Username:Password -X POST -HContent-type:application/json -d '{"plainText":"Password"}' 'https://x.x.x.x:28443/web/api/v2/tools/encrypt'

In the preceding command, Username:Password is the username and password for logging in to the system, the value of "plainText" is the password used when the generate_keystore.sh script was called, and x.x.x.x is the floating IP address of Manager. Commands that contain authentication passwords pose security risks. Disable the command recording function (history) before running such commands to prevent information leakage.

- Configure paths for the client to access the flink.keystore and flink.truststore files.

- Relative path (recommended):

Perform the following steps to set the paths of flink.keystore and flink.truststore to relative paths. Make sure that the relative paths resolve from the directory where the Flink client command is executed.

- Create a directory, for example, ssl, in /opt/hadoopclient/Flink/flink/conf/.

cd /opt/hadoopclient/Flink/flink/conf/

mkdir ssl

- Move the flink.keystore and flink.truststore files to the /opt/hadoopclient/Flink/flink/conf/ssl/ directory.

mv flink.keystore ssl/

mv flink.truststore ssl/

- Change the values of the following parameters to relative paths in the flink-conf.yaml file:

security.ssl.keystore: ssl/flink.keystore

security.ssl.truststore: ssl/flink.truststore
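The relative-path steps above (create the ssl directory, move the two files, update flink-conf.yaml) can be combined into one function. This is a hedged sketch rather than a supplied tool: use_relative_ssl is a hypothetical name, the function assumes that flink.keystore, flink.truststore, and flink-conf.yaml all sit in the given conf directory, and it uses the GNU form of sed -i.

```shell
# use_relative_ssl CONF_DIR   (hypothetical helper, for illustration only)
# Moves flink.keystore and flink.truststore into CONF_DIR/ssl and points the
# two security.ssl.* path items in flink-conf.yaml at the relative location.
use_relative_ssl() {
    local conf_dir="$1"
    mkdir -p "$conf_dir/ssl" || return 1
    mv "$conf_dir/flink.keystore" "$conf_dir/flink.truststore" \
        "$conf_dir/ssl/" || return 1
    # The trailing colon in each pattern keeps items such as
    # security.ssl.keystore-password untouched.
    sed -i \
        -e 's|^security\.ssl\.keystore:.*|security.ssl.keystore: ssl/flink.keystore|' \
        -e 's|^security\.ssl\.truststore:.*|security.ssl.truststore: ssl/flink.truststore|' \
        "$conf_dir/flink-conf.yaml"
}

# Example usage with the example client directory from this section:
# use_relative_ssl /opt/hadoopclient/Flink/flink/conf
```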

- Absolute path:

After the generate_keystore.sh script is executed, the file path of flink.keystore and flink.truststore is automatically set to the absolute path /opt/hadoopclient/Flink/flink/conf/ in the flink-conf.yaml file. In this case, you need to move the flink.keystore and flink.truststore files from the conf directory to this absolute path on the Flink client and YARN nodes.

- Run a wordcount job.

To submit or run jobs on Flink, the user must have the following permissions:

- If Ranger authentication is enabled, the current user must belong to the hadoop group or must have been granted read and write permissions on /flink in Ranger.

- If Ranger authentication is disabled, the current user must belong to the hadoop group.

- For a normal cluster (Kerberos authentication disabled), you can submit jobs in either of the following ways:

- Run the following commands to start a session and submit a job in the session:

yarn-session.sh -nm "session-name" -d

flink run /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar

- Run the following command to submit a single job on Yarn:

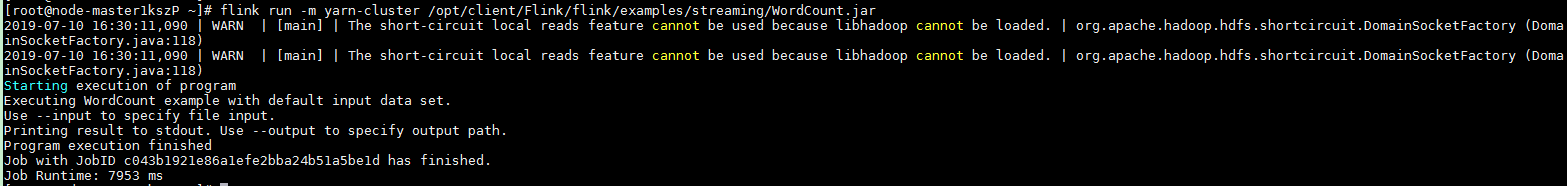

flink run -m yarn-cluster /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar

- For a security cluster (Kerberos authentication enabled), you can submit jobs in either of the following ways based on the paths of the flink.keystore and flink.truststore files:

- If the flink.keystore and flink.truststore files are stored in the relative path:

- Run the following commands in the directory that contains the ssl directory to start a session and submit a job in the session:

ssl is a relative path. For example, if ssl is in /opt/hadoopclient/Flink/flink/conf/, run the commands in the /opt/hadoopclient/Flink/flink/conf/ directory.

cd /opt/hadoopclient/Flink/flink/conf

yarn-session.sh -t ssl/ -nm "session-name" -d

flink run /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar

- Run the following command to submit a single job on Yarn:

cd /opt/hadoopclient/Flink/flink/conf

flink run -m yarn-cluster -yt ssl/ /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar
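Because -t ssl/ and -yt ssl/ are resolved against the current directory, a submission from the wrong directory will not find the files. A small pre-flight check can catch this before anything is sent to Yarn. The sketch below is illustrative only: check_ssl_dir is a hypothetical helper, and it checks nothing beyond the presence of the two files.

```shell
# check_ssl_dir [DIR]   (hypothetical helper, for illustration only)
# Succeeds only if DIR (default: the current directory) contains
# ssl/flink.keystore and ssl/flink.truststore.
check_ssl_dir() {
    local dir="${1:-.}" f
    for f in flink.keystore flink.truststore; do
        if [ ! -f "$dir/ssl/$f" ]; then
            echo "Missing $dir/ssl/$f; run the command from the directory that contains ssl/" >&2
            return 1
        fi
    done
}

# Example usage with the commands from this section:
# cd /opt/hadoopclient/Flink/flink/conf
# check_ssl_dir && yarn-session.sh -t ssl/ -nm "session-name" -d
```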

- If the flink.keystore and flink.truststore files are stored in the absolute path:

- Run the following commands to start a session and submit a job in the session:

cd /opt/hadoopclient/Flink/flink/conf

yarn-session.sh -nm "session-name" -d

flink run /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar

- Run the following command to submit a single job on Yarn:

flink run -m yarn-cluster /opt/hadoopclient/Flink/flink/examples/streaming/WordCount.jar

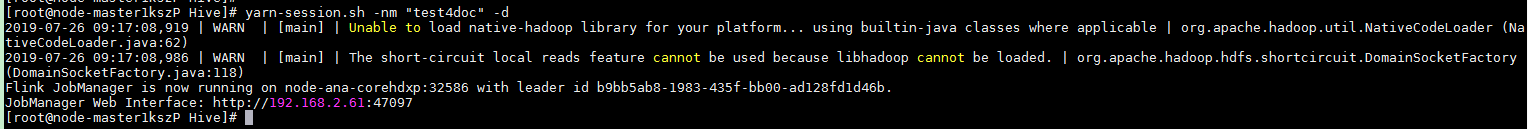

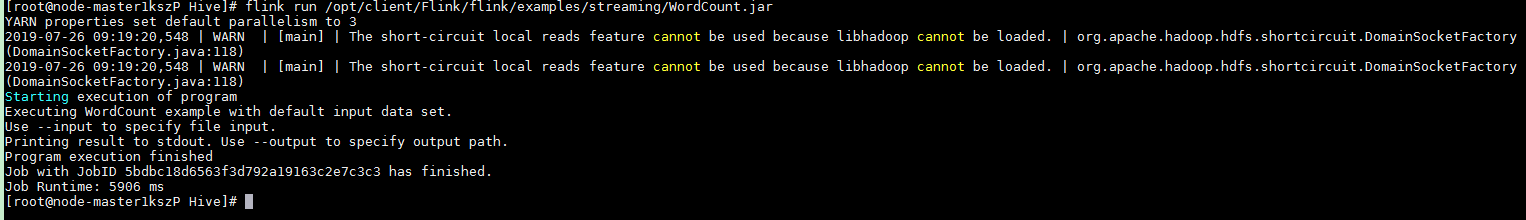

- After the job has been successfully submitted, the following information is displayed on the client:

Figure 2 Job submitted successfully on Yarn

Figure 3 Session started successfully

Figure 4 Job submitted successfully in the session

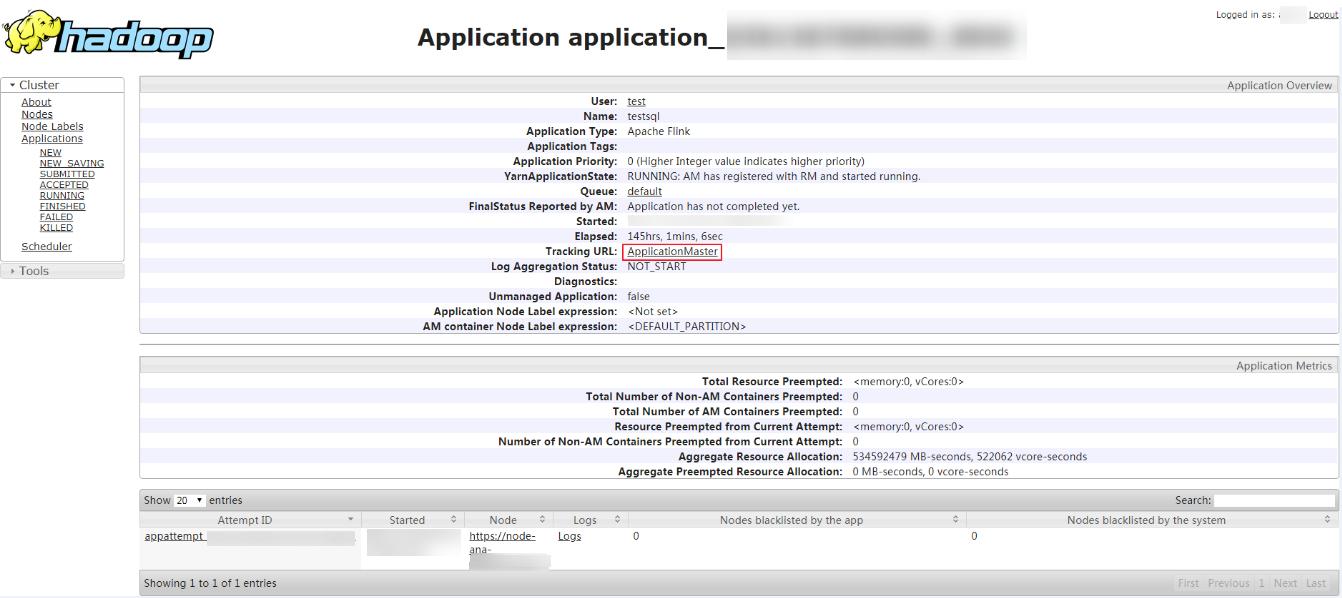

- Go to the native page of the YARN service, find the application of the corresponding job, and click the application name to go to the job details page.

- If the job is not completed, click Tracking URL to go to the native Flink page and view the job running information.

- If the job submitted in a session has been completed, you can click Tracking URL to log in to the native Flink service page to view job information.

Figure 5 application
