Connecting Hortonworks HDP to OBS

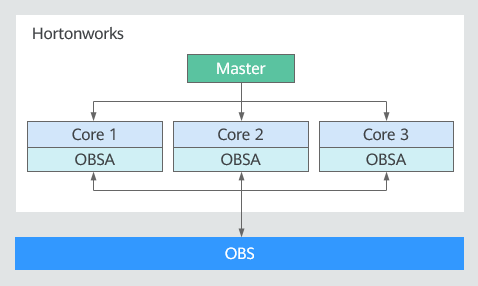

Deployment View

Version Information

Hardware: 1 master node + 3 core nodes (flavor: 8 vCPUs and 32 GB memory; OS: CentOS 7.5)

Software: Ambari 2.7.1.0 and HDP 3.0.1.0

Updating OBSA-HDFS

- Download the OBSA-HDFS JAR package that matches your Hadoop version.

Download the OBSA-HDFS JAR package (for example, hadoop-huaweicloud-3.1.1-hw-53.8.jar) to the /mnt/obsjar directory.

- In a hadoop-huaweicloud-x.x.x-hw-y.jar package name, x.x.x indicates the Hadoop version number, and y indicates the OBSA version number. For example, in hadoop-huaweicloud-3.1.1-hw-53.8.jar, 3.1.1 is the Hadoop version number, and 53.8 is the OBSA version number.

- If the Hadoop version is 3.1.x, select hadoop-huaweicloud-3.1.1-hw-53.8.jar.

- Copy the downloaded OBSA-HDFS JAR package to the following directories:

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/share/hst/activity-explorer/lib/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/3.0.1.0-187/hadoop-mapreduce/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/3.0.1.0-187/spark2/jars/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/3.0.1.0-187/tez/lib/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /var/lib/ambari-server/resources/views/work/CAPACITY-SCHEDULER{1.0.0}/WEB-INF/lib/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /var/lib/ambari-server/resources/views/work/FILES{1.0.0}/WEB-INF/lib/

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /var/lib/ambari-server/resources/views/work/WORKFLOW_MANAGER{1.0.0}/WEB-INF/lib/

- Create a symbolic link to the JAR package in the hadoop-mapreduce directory:

ln -s /usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-huaweicloud.jar
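The copy and link steps above can be collected into a single bash function. This is a minimal sketch under stated assumptions, not an official installer: the function name install_obsa_jar and the ROOT prefix variable are hypothetical (ROOT is empty by default and exists only so you can rehearse the layout in a scratch directory), the HDP build defaults to 3.0.1.0-187 as in this guide, and the name check simply enforces the hadoop-huaweicloud-x.x.x-hw-y.jar pattern described earlier.

```shell
# Sketch only: copy the OBSA-HDFS JAR to the directories used in this guide
# and create the hadoop-huaweicloud.jar link in hadoop-mapreduce.
# Hypothetical: the function name and the ROOT prefix (empty by default;
# point it at a scratch directory to rehearse the layout).
install_obsa_jar() {
  local jar=$1 hdp_version=${2:-3.0.1.0-187} root=${ROOT:-}
  local name re
  name=$(basename "$jar")

  # Expected name: hadoop-huaweicloud-<Hadoop x.x.x>-hw-<OBSA version>.jar
  re='^hadoop-huaweicloud-([0-9]+\.[0-9]+\.[0-9]+)-hw-([0-9.]+)\.jar$'
  if [[ $name =~ $re ]]; then
    echo "Hadoop ${BASH_REMATCH[1]}, OBSA ${BASH_REMATCH[2]}"
  else
    echo "unexpected JAR name: $name" >&2
    return 1
  fi
  [[ -f $jar ]] || { echo "JAR not found: $jar" >&2; return 1; }

  local views=$root/var/lib/ambari-server/resources/views/work
  local dirs=(
    "$root/usr/hdp/share/hst/activity-explorer/lib"
    "$root/usr/hdp/$hdp_version/hadoop-mapreduce"
    "$root/usr/hdp/$hdp_version/spark2/jars"
    "$root/usr/hdp/$hdp_version/tez/lib"
    "$views/CAPACITY-SCHEDULER{1.0.0}/WEB-INF/lib"
    "$views/FILES{1.0.0}/WEB-INF/lib"
    "$views/WORKFLOW_MANAGER{1.0.0}/WEB-INF/lib"
  )

  local d
  for d in "${dirs[@]}"; do
    # Report a missing directory instead of creating it.
    [[ -d $d ]] || { echo "missing directory: $d" >&2; return 1; }
    cp "$jar" "$d/" || return 1
  done

  # Same link as the ln -s step; -f lets the function be re-run.
  local mr=$root/usr/hdp/$hdp_version/hadoop-mapreduce
  ln -sf "$mr/$name" "$mr/hadoop-huaweicloud.jar"
}
```

For example, after sourcing the function, a user with write access to these directories could run install_obsa_jar /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar 3.0.1.0-187. The function stops at the first missing directory rather than creating it, so an unexpected layout is reported instead of silently patched over.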

Adding Configuration Items to the HDFS Cluster

- Under CONFIGS > ADVANCED of the HDFS cluster, add the following configuration items to Custom core-site.xml: fs.obs.access.key, fs.obs.secret.key, fs.obs.endpoint, and fs.obs.impl.

- fs.obs.access.key, fs.obs.secret.key, and fs.obs.endpoint specify the AK, SK, and endpoint, respectively. Enter your actual AK/SK pair and endpoint. For how to obtain them, see Access Keys (AK/SK) and Endpoints and Domain Names.

- Set fs.obs.impl to org.apache.hadoop.fs.obs.OBSFileSystem.

- Restart the HDFS cluster.
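Ambari should render these Custom core-site entries into the cluster's core-site.xml as ordinary Hadoop <property> elements. The hypothetical bash helper below prints the four entries in that form, which can help when reviewing the generated file; the AK, SK, and endpoint arguments are placeholders, and this is an illustration of the key/value pairs, not a replacement for the CONFIGS page.

```shell
# Hypothetical helper: print the four OBS settings as Hadoop <property>
# elements. Arguments are placeholders for your own AK, SK, and endpoint.
# Values are inserted verbatim (no XML escaping).
obs_core_site_props() {
  local ak=$1 sk=$2 endpoint=$3
  local -a pairs=(
    "fs.obs.access.key" "$ak"
    "fs.obs.secret.key" "$sk"
    "fs.obs.endpoint"   "$endpoint"
    "fs.obs.impl"       "org.apache.hadoop.fs.obs.OBSFileSystem"
  )
  local i
  # pairs holds name/value alternately, so step by two.
  for ((i = 0; i < ${#pairs[@]}; i += 2)); do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "${pairs[i]}" "${pairs[i+1]}"
  done
}
```

For example, obs_core_site_props YOUR_AK YOUR_SK YOUR_ENDPOINT prints four <property> blocks that can be compared against the core-site.xml Ambari deploys.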

Adding Configuration Items to the MapReduce2 Cluster

- Under CONFIGS > ADVANCED of the MapReduce2 cluster, in mapred-site.xml, change the value of mapreduce.application.classpath to /usr/hdp/3.0.1.0-187/hadoop-mapreduce/*.

- Restart the MapReduce2 cluster.
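The value /usr/hdp/3.0.1.0-187/hadoop-mapreduce/* is a Java-style classpath wildcard: it stands for the .jar and .JAR files directly inside that directory, and subdirectories are not searched. That directory is where the OBSA-HDFS JAR and its hadoop-huaweicloud.jar link were placed. The hypothetical bash helper below mimics that expansion to check whether an OBSA-HDFS JAR would be picked up; it is a local sanity check under that assumption, not how YARN itself assembles the container classpath.

```shell
# Hypothetical check: list the OBSA-HDFS JARs that a classpath wildcard such
# as /usr/hdp/3.0.1.0-187/hadoop-mapreduce/* would cover. Accepts either the
# directory or the "dir/*" form. Returns 0 if at least one is found.
obsa_on_wildcard_classpath() {
  local dir=${1%/\*}   # strip a trailing "/*" if present
  local f found=1
  # Only files directly in dir, matching the wildcard's .jar/.JAR rule.
  for f in "$dir"/*.jar "$dir"/*.JAR; do
    [[ -e $f ]] || continue   # an unmatched glob stays literal; skip it
    case ${f##*/} in
      hadoop-huaweicloud*) echo "found: $f"; found=0 ;;
    esac
  done
  return "$found"
}
```

For example, obsa_on_wildcard_classpath '/usr/hdp/3.0.1.0-187/hadoop-mapreduce/*' should list both the versioned JAR and the hadoop-huaweicloud.jar link once the copy and link steps are done.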

Adding a JAR Package for Connecting Hive to OBS

- Create the auxlib folder on the Hive Server node:

mkdir /usr/hdp/3.0.1.0-187/hive/auxlib

- Copy the OBSA-HDFS JAR package to the auxlib folder:

cp /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar /usr/hdp/3.0.1.0-187/hive/auxlib

- Restart the Hive cluster.
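The two Hive commands can be made safe to re-run, as in this minimal sketch for the Hive Server node. Assumptions: the function name install_hive_auxlib_jar is hypothetical, the HDP build defaults to 3.0.1.0-187 as in this guide, and an optional ROOT prefix (empty by default) lets you rehearse in a scratch directory. mkdir -p tolerates an existing auxlib folder, and the JAR is checked before copying.

```shell
# Sketch: place the OBSA-HDFS JAR in Hive's auxlib folder.
# Hypothetical: the function name and the ROOT rehearsal prefix.
install_hive_auxlib_jar() {
  local jar=$1 hdp_version=${2:-3.0.1.0-187}
  local auxlib=${ROOT:-}/usr/hdp/$hdp_version/hive/auxlib
  [[ -f $jar ]] || { echo "JAR not found: $jar" >&2; return 1; }
  # -p: no error if auxlib already exists, so re-runs are harmless.
  mkdir -p "$auxlib" && cp "$jar" "$auxlib/" && echo "copied to $auxlib"
}
```

For example, install_hive_auxlib_jar /mnt/obsjar/hadoop-huaweicloud-3.1.1-hw-53.8.jar, followed by restarting the Hive cluster as above.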
